Introduction

The world doesn’t need another “Hello, World!” tutorial.

You know the ones I mean: those articles that promise to teach you in ten minutes how to build a web server in whatever the trendy programming language is this week. You copy and paste some code (typically all in just one file) and, voilà! You have a web server running on your laptop that replies to every request with a simple greeting.

These tutorials can get you started, but they also leave you with a false sense of accomplishment. You’ve learned practically nothing about building a real system. You’re left at the edge of a cliff, wondering how to:

- expand this to do something meaningful?

- design an easy-to-use API?

- properly structure the code so it doesn’t turn into a giant ball of spaghetti as it grows and changes?

- protect the system against attack?

- authenticate callers and authorize their requests?

- reliably perform asynchronous and scheduled work?

- notify clients about new things that happen on the server?

- structure your data and handle changes to that structure over time?

- ensure the authenticity and integrity of that data?

- deploy to production and upgrade the system without downtime?

- monitor the system and automatically generate alerts when something goes wrong?

- scale up to meet increased usage, and scale back down during lulls?

- allow the system to be extended by others?

In short, how do I build not just a toy, but a complete system? How do I build something as complex and scalable as a social media platform, or an online retail store, or a consumer payment system?

These tutorials, and the courses that use them, will teach you how to build such things. It will definitely take you longer to go through these than one of those “Hello, World!” tutorials, but you’ll also learn a lot more. If you’re taking one of my courses, you’ll also get to put them into practice, which is the best way to really learn the concepts and techniques. By the end, you’ll have a conceptual foundation upon which you can build a successful career as a systems engineer.

So let’s get started! First up: a tour of the various building blocks that make up just about any transaction processing system.

System Building Blocks

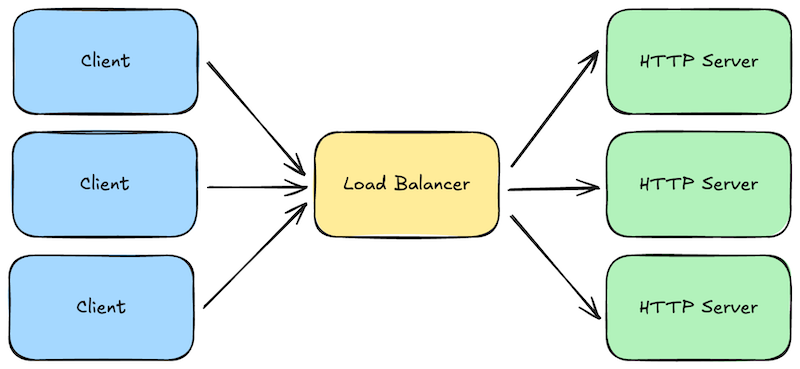

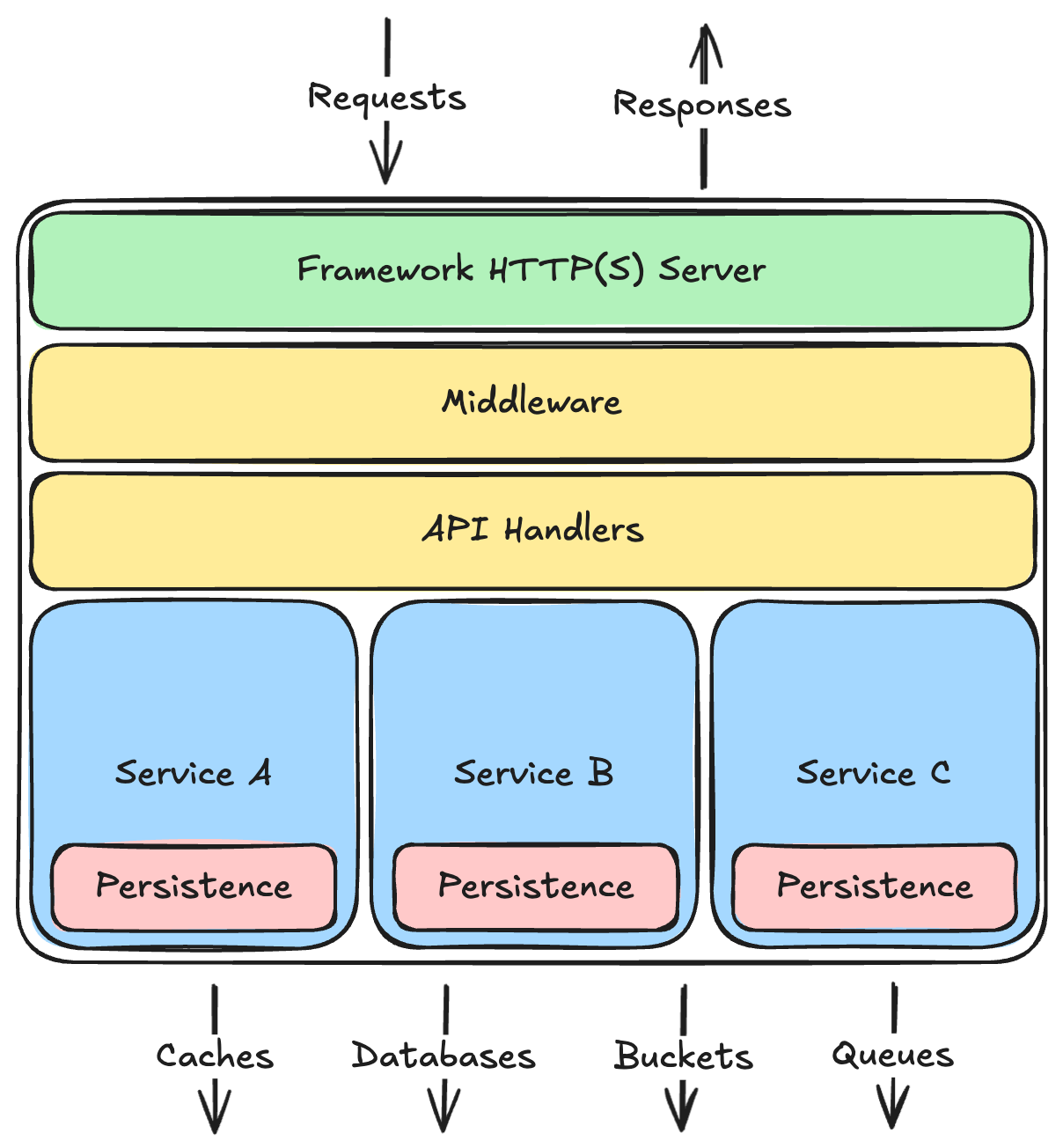

If you were to look at the architectures of the web sites you use most often, you would probably notice a lot of similarities. Most will have a set of HTTP servers, with load balancers in front, that respond to requests from clients. Those servers will likely talk to databases, caches, queues, and buckets to manage data. They might also use machine-learning (ML) models to make predictions. Event consumers will respond to new messages written to the queues, or changes to database records. Other jobs will run periodically to archive data, re-train ML models with new data, or perform other routine tasks. What these components do will no doubt vary from system to system, but the types of components used will come from a relatively small set of common building blocks.

This tutorial will give you an overview of these common building blocks. We will learn what each is typically used for, and some common options or variations you might see in practice. Once you understand these building blocks, you can combine them in various ways to build just about any kind of system.

Load Balancers and API Gateways

When a request is made to your web servers, either by a web browser or by a client calling your application programming interface (API), the first major system component to receive those requests is typically a load balancer. These are the front door to your system.

Load balancers are fairly generic components, meaning they are typically open-source software programs that can be used “off the shelf” with just a minimal amount of configuration. Popular examples include NGINX and HAProxy. Cloud providers also offer these as hosted services you can simply deploy—for example, AWS Elastic Load Balancer or Azure Load Balancer.

Load balancers perform several jobs that are critical to building highly-scalable and reliable systems:

- Load Balancing: Not surprisingly, the primary job of a load balancer is to distribute requests across a fleet of your “downstream” HTTP servers (i.e., load balancing). You configure the IP addresses for your domain to point to the load balancers, and each load balancer is configured with a set of addresses it can forward those requests to. The set of downstream addresses can then change over time as you roll out new versions or scale up/down. Balancers typically offer multiple strategies for balancing the load, from simple round-robin to more sophisticated ones that pay attention to how long requests are taking and how many outstanding requests each downstream server already has.

- Blocking and Rate Limiting: Load balancers also protect your downstream HTTP servers from attack and abuse. They are specifically designed to handle massive amounts of requests, and can be configured to block particular sources of traffic. They can also limit the number of requests a given client can make during a given time duration, so that one client doesn’t hog all the system resources.

- Caching: If your downstream HTTP servers mostly return static content that rarely changes, you can configure your load balancers to cache and replay some responses for a period of time. This reduces the load on your downstream servers.

- Request/Response Logging: Load balancers can be configured to log some data about each request and response so that you can analyze your traffic, or diagnose problems reported by your customers.

- HTTPS Termination: If your load balancer and downstream servers are all inside a trusted private network, and you don’t require secure/encrypted connections between your own servers, you can configure your load balancer to talk HTTPS with the Internet, but HTTP with your downstream servers. This used to be a common configuration when CPU speeds made HTTPS much slower than HTTP, but these days (in 2025) it’s common to just use HTTPS everywhere.
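To make the balancing strategies mentioned above a bit more concrete, here is a minimal sketch of two of them: simple round-robin, and one that tracks how many outstanding requests each downstream server has. The class and method names are my own invention for illustration; real load balancers like NGINX or HAProxy implement these strategies internally and expose them through configuration, not code like this.

```python
import itertools


class RoundRobinBalancer:
    """Hands out downstream servers in a fixed rotation."""

    def __init__(self, servers):
        self._cycle = itertools.cycle(list(servers))

    def pick(self):
        return next(self._cycle)


class LeastOutstandingBalancer:
    """Picks the server with the fewest in-flight requests."""

    def __init__(self, servers):
        self.outstanding = {server: 0 for server in servers}

    def pick(self):
        # min() returns the first server with the lowest count, so ties
        # go to whichever server was listed first.
        server = min(self.outstanding, key=self.outstanding.get)
        self.outstanding[server] += 1
        return server

    def done(self, server):
        # Call this once the downstream server has responded.
        self.outstanding[server] -= 1
```

Round-robin needs no bookkeeping at all, which is why it is a common default. The second strategy costs a little more, but it avoids piling new requests onto a server that is already busy with slow ones.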

Load balancers are sometimes referred to as reverse proxies because they essentially do the reverse of a client-side proxy. Instead of collecting and forwarding requests from multiple clients within an organization’s internal network, load balancers collect requests from the Internet and forward them to downstream servers within your system.

Although load balancers can be used off-the-shelf with minimal configuration, many now support much more customized behavior via scripting. This customized behavior can be very specific to your use case, but common examples include:

- Authentication: If your servers and clients exchange digitally-signed authentication tokens, your load balancer scripts can verify the token signatures, and block obvious attempts to hijack sessions by tampering with those tokens. This reduces obviously fraudulent load on your downstream servers, saving resources.

- Authorization: Your scripts could also look up whether the authenticated user has permission to make the request they are making.

- Request Validation: If requests contain data that is easy to validate, you can perform those simple validations on your load balancers to block obviously invalid requests before they get to your downstream servers.

- Request Versioning: Sometimes you will want to change the API on your downstream servers, but you can’t force your existing clients to change their code, so you have to support multiple versions of your API at the same time. Your load balancer scripts can translate version 1 requests into version 2, and version 2 responses back into version 1 responses.

All of this custom functionality could occur within your downstream HTTP servers, but if you can move some of it into your load balancers, you can block obviously invalid requests early, reducing the load on your downstream servers. Load balancers are typically written in a high-performance language like C, C++, or Rust, so they can often handle much more load than downstream servers written in slower, less scalable scripting languages like Python, Ruby, or JavaScript.
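As a rough illustration of the authentication check described above, here is a sketch of signing a token and verifying its signature using an HMAC. The token format (`payload.signature`), the function names, and the hard-coded key are all assumptions made for this example; a real system would more likely use a standard format such as JWT and load its key from a secret store.

```python
import base64
import hashlib
import hmac

SECRET_KEY = b"example-secret"  # hypothetical; never hard-code a real key


def sign_token(payload: bytes, key: bytes = SECRET_KEY) -> str:
    """Return 'payload.signature', both URL-safe base64 encoded."""
    signature = hmac.new(key, payload, hashlib.sha256).digest()
    return (
        base64.urlsafe_b64encode(payload).decode()
        + "."
        + base64.urlsafe_b64encode(signature).decode()
    )


def verify_token(token: str, key: bytes = SECRET_KEY):
    """Return the payload if the signature is valid, otherwise None."""
    try:
        encoded_payload, encoded_signature = token.split(".")
        payload = base64.urlsafe_b64decode(encoded_payload)
        signature = base64.urlsafe_b64decode(encoded_signature)
    except ValueError:
        return None  # malformed token
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # compare_digest avoids leaking information through timing differences.
    if hmac.compare_digest(signature, expected):
        return payload
    return None
```

If a caller changes the payload but keeps the old signature, the recomputed signature no longer matches and the request can be rejected before it ever reaches a downstream server.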

When load balancers become highly-customized for a given system, we often start to refer to them as API Gateways instead to reflect that they are specific to a particular Application Programming Interface (API). But they still serve the basic jobs described above, acting as the front door to the rest of your system.

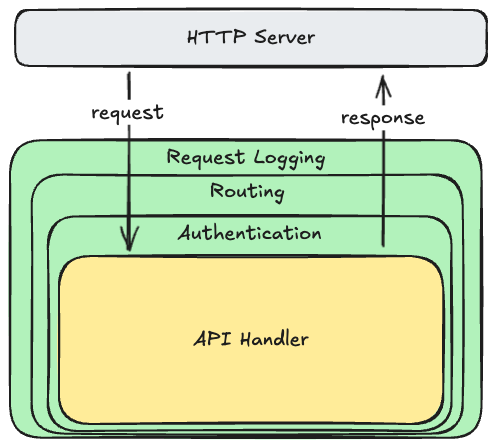

HTTP Servers

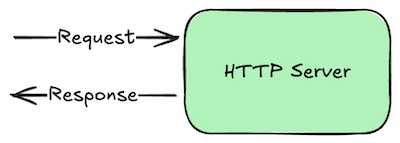

Load balancers forward requests to one or more HTTP servers. These are simply programs that run continuously, listen for incoming requests on a given network port number, and write responses.

What makes them HTTP servers in particular is that the requests and responses adhere to the Hypertext Transfer Protocol (HTTP) standard. This is a relatively simple request/response protocol, so it’s quite easy to support. Nearly every programming language has built-in or library frameworks for building HTTP servers.

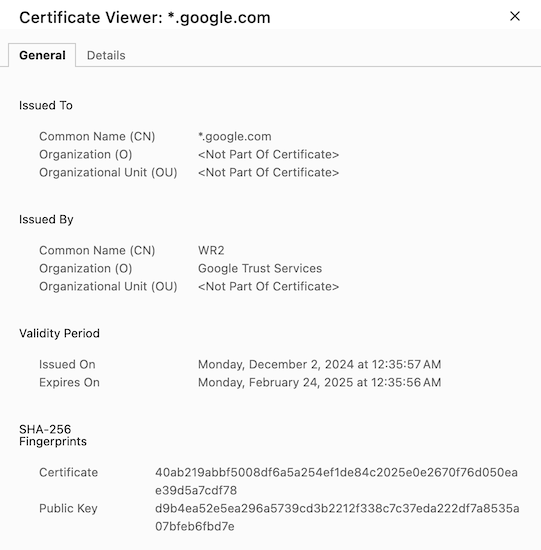

By default, HTTP is not encrypted, but we can add encryption by getting a digital certificate and supporting HTTPS, which stands for “HTTP Secure”. The core protocol remains the same, but the messages sent back and forth are encrypted using Transport Layer Security (TLS), and clients can use the digital certificate to verify that they are really talking to the server they think they are talking to.

Some HTTP servers are really just glorified file servers. Clients can request files from a specific directory on the server’s disk, and in some cases, the server might allow clients to update those files as well. These files are typically those that comprise the content of web pages: HTML, CSS, various image and video formats, and JavaScript.

But these days (in 2025) it’s more common to build HTTP servers that send and receive structured data instead of formatted content. For example, instead of returning a formatted HTML page showing the contents of your social media feed, these kinds of servers return just the data, encoded in an easily interpreted text-based format, such as JSON. Clients can then use that JSON in any way they want.

This decoupling is very powerful: it allows the same HTTP server to support many types of application platforms that render content very differently. For example, a web client can transform the JSON into HTML to display within a web page, while a native mobile app will put it into native UI elements. Scripts or AI models might only need the data itself, combining or transforming it before sending it somewhere else.

Because these kinds of HTTP servers expose a programming interface to applications, as opposed to files on disk, they are often referred to as API servers. Strictly speaking, they are “HTTP API servers” because they speak HTTP and not some other networking protocol, but HTTP is such a default choice these days that we typically leave off the “HTTP” part.
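To show how little is needed to return structured data instead of formatted content, here is a minimal sketch of an API server built with Python’s standard library. The `/feed` path, the `FEED` data, and the `fetch_feed_once` helper are made up for this example, and a production server would normally use a fuller framework.

```python
import http.client
import json
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-memory data standing in for a real database.
FEED = [{"id": 1, "text": "Hello from the API"}]


class FeedHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path == "/feed":
            self._send_json(200, {"posts": FEED})
        else:
            self._send_json(404, {"error": "not found"})

    def _send_json(self, status, payload):
        body = json.dumps(payload).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the example quiet; a real server would log requests


def fetch_feed_once():
    """Start the server on a free port, make one request, and shut down."""
    server = HTTPServer(("127.0.0.1", 0), FeedHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        conn = http.client.HTTPConnection("127.0.0.1", server.server_port, timeout=5)
        conn.request("GET", "/feed")
        response = conn.getresponse()
        result = (response.status, json.loads(response.read()))
        conn.close()
        return result
    finally:
        server.shutdown()
        server.server_close()
```

Calling `fetch_feed_once()` plays the role of a client: it gets back a status code and plain data, and it is entirely up to that client whether to render the data as HTML, native UI, or nothing at all.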

“Serverless” Functions

In a cloud computing environment (AWS, Azure, Google Cloud, etc.), API servers are usually deployed as a continuously-running virtual machine with a fixed amount of CPU and RAM. You pay for these machines as long as they are running, regardless of whether they are receiving requests. They are like always leaving the lights on in a room—that’s fine if people are always coming and going, but a bit of a waste if the room isn’t used very often.

If your API is only used sporadically, you can often save money by turning your API servers into so-called “serverless” functions behind an API gateway. For example, on AWS you could use their API Gateway product to expose a set of APIs, and configure each to trigger a specific AWS Lambda function when requested.

When the API Gateway receives an HTTP request matching one of your configured APIs, it triggers the associated Lambda function, waits for its response, and transforms that into an appropriate HTTP response. The Lambda response can typically provide not only a status code and response body, but also various headers that should be included in the API Gateway’s response.
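Here is a sketch of what such a function might look like in Python. It assumes the API is configured with API Gateway’s Lambda proxy integration, where the request arrives as an `event` dictionary and the function returns a dictionary containing `statusCode`, `headers`, and `body`. The `name` query parameter and the greeting are invented for the example.

```python
import json


def handler(event, context):
    """Entry point that API Gateway invokes for each matching request."""
    # queryStringParameters is None when the request has no query string.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"greeting": f"Hello, {name}!"}),
    }
```

Notice that there is no server loop and no port to listen on: the function only runs when a request arrives, and it exits as soon as it returns.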

The API Gateway and your Lambda functions will scale automatically to handle just about any request load you might experience. But Lambda functions do tend to execute more slowly than a dedicated HTTP server. Lambda functions execute on AWS-managed servers, and those servers are also executing everyone else’s functions at the same time. Each server has only a fixed amount of resources, so your performance may vary depending on what the other functions are doing at the same time.

New server instances also need to download and initialize your Lambda function’s code on first execution, which can take a while if the code is particularly large or requires JIT compilation. This results in a cold-start problem, where the latency experienced by the client seems to spike on occasion for no particular reason. You might be able to avoid this using Lambda’s SnapStart feature, but it currently has a lot of limitations.

But the good news is that you are only charged for the CPU and resources used by your Lambda function while it is actually running. If your API gets only a few requests per day, you only pay for those few short invocations, not for the rest of the time, when the underlying server is processing other requests for other people.

The economics of serverless functions, combined with their higher latency and cold start problem, imply that serverless functions can be a good choice for APIs that are used infrequently or sporadically. But if you are expecting many requests a second, at a more or less constant rate, and consistent performance really matters, then a continuously-running server is typically a better option.

WebSocket Servers

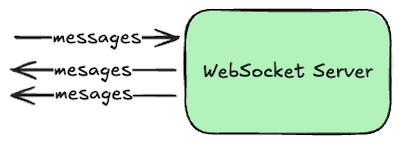

Although HTTP is built on top of lower-level network sockets, it remains a very simple request/response protocol. Clients can make requests to servers, and the servers can respond, but the server can’t tell the client about something without the client asking about it first. This is fine when your system only needs to answer questions posed by clients, but what if your system also needs to notify those clients about something they haven’t asked about yet?

In these cases we use the bi-directional WebSockets protocol instead, which allows either side of the conversation to send a new message at any time. Clients can still send request-like messages, and the server can send response-like messages in return, but the server can also send unsolicited messages to the client whenever it wants to. For example, the server might notify clients about new posts to a channel made by other clients, or that a long-running asynchronous job is now complete.

To get a WebSocket connection, clients actually start by talking HTTP and then requesting an “upgrade” to WebSockets. This allows a server to support both HTTP and WebSocket conversations over the same port number, which is handy when clients are behind a firewall that only allows traffic to the Internet over the customary web port numbers.
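The server’s side of that upgrade is small enough to sketch. During the handshake the client sends a random `Sec-WebSocket-Key` header, and the server proves it understands WebSockets by hashing that key together with a fixed GUID from the WebSocket specification (RFC 6455). The function names below are my own; in practice a WebSocket library does this for you.

```python
import base64
import hashlib

# Fixed GUID defined by RFC 6455 for the opening handshake.
WEBSOCKET_GUID = "258EAFA5-E914-47DA-95CA-C5AB0DC85B11"


def accept_key(client_key: str) -> str:
    """Compute the Sec-WebSocket-Accept value for a Sec-WebSocket-Key."""
    digest = hashlib.sha1((client_key + WEBSOCKET_GUID).encode("ascii")).digest()
    return base64.b64encode(digest).decode("ascii")


def upgrade_response(client_key: str) -> str:
    """Build the HTTP response that switches the connection to WebSockets."""
    return (
        "HTTP/1.1 101 Switching Protocols\r\n"
        "Upgrade: websocket\r\n"
        "Connection: Upgrade\r\n"
        f"Sec-WebSocket-Accept: {accept_key(client_key)}\r\n"
        "\r\n"
    )
```

After the `101 Switching Protocols` response, the same TCP connection stops carrying HTTP and starts carrying WebSocket frames in both directions.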

Once connected, both the server and the client can send messages to each other at any time. But either side may disconnect at any time as well, so your client and server code must be written to handle this. Typically we use queues and consumers in front of a WebSocket connection to ensure that all messages eventually get delivered to the other side. But if speed is more important than guaranteed delivery (e.g., multi-player games), just write directly to the WebSocket and discard messages that fail to send.

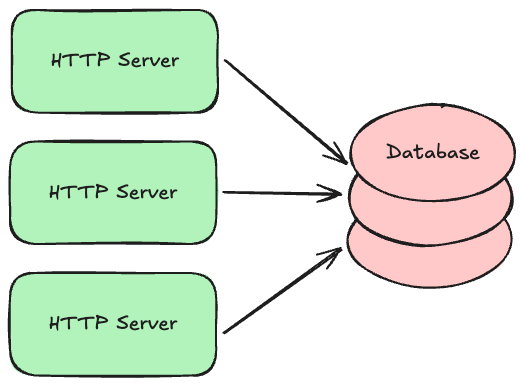

Databases

Most systems will eventually accept new data that you want to keep around for a while, and you typically need to ensure that data doesn’t accidentally get lost. The natural home for data like this is a persistent database with good durability guarantees.

There are several kinds of databases used to build transaction processing systems:

- Relational (SQL): The most common kind of database where data is organized into a set of tables, each of which has an explicit set of columns. Data can be flexibly queried by filtering and joining table rows together using common keys. Most relational databases also support transactions, which allow you to make multiple writes to different tables atomically. Common open-source examples include PostgreSQL, MySQL, and MariaDB. Most cloud providers also offer hosted versions of these, with automated backups.

- Document/Property-Oriented (aka “NoSQL”): Instead of organizing the data into tables with explicit columns, these databases allow you to store records containing any set of properties, and those properties can in theory vary from record to record (though they often don’t in practice). In some systems you can only read and write a single document at a time given its unique key, but others support indexes and quasi-SQL querying capabilities. Common open-source examples include MongoDB, CouchDB, and Cassandra. Most cloud providers also offer their own hosted solutions, such as DynamoDB or Spanner.

- Simple Key/Value: Very simple but extremely fast data stores that only support reading and writing opaque binary values with a unique key. Common open-source examples include LevelDB and its successor RocksDB. These are currently just embedded libraries, so one typically builds a server around them to enable access to the database across a network.

Complex systems may use multiple types of databases at the same time. For example, your highly-structured billing records might be stored in a relational database, while your loosely-structured customer profile records might be stored in a document-oriented database.

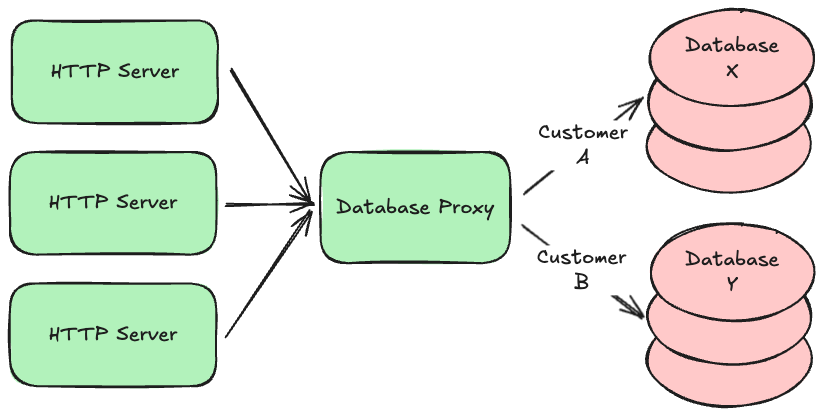

Regardless of which type you use, it is common to partition or “shard” your data across multiple database servers. For example, data belonging to customer A might exist on database server X, while data belonging to customer B might exist on database server Y. This allows your system to continue scaling up as the amount of data you store increases beyond what a single database server can handle. Knowing where a given customer’s data lives is often delegated to a component in the middle, similar to a load balancer or proxy, that directs requests to the appropriate locations. This allows you to change the partitioning strategy over time without having to update all your API servers.
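One simple way that component in the middle could decide where a customer’s data lives is to hash the customer ID and use the result to pick a shard. The sketch below assumes a fixed list of shard host names that I made up for illustration; it is one possible strategy, not the only one.

```python
import hashlib

# Hypothetical shard addresses; a real deployment would load these from config.
SHARDS = ["db-x.internal", "db-y.internal", "db-z.internal"]


def shard_for(customer_id: str, shards=SHARDS) -> str:
    """Map a customer ID to one shard, the same way every time."""
    # A stable hash (unlike Python's built-in hash()) gives the same answer
    # across processes and restarts.
    digest = hashlib.sha256(customer_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(shards)
    return shards[index]
```

The weakness of this particular approach is that changing the number of shards moves most customers to a different shard. That is one reason to keep the logic in a single routing component, where it can later be swapped for something like consistent hashing or an explicit lookup table.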

Many of the hosted databases offered by cloud providers do this partitioning automatically—for example, both DynamoDB and Aurora automatically partition your data so that your data size can grow almost indefinitely. If you self-host your databases instead, look for an open-source partitioning proxy for your particular database engine (most have one).

Many database engines also support clustering where each partition consists of multiple physical servers working together as a single logical database. One server is typically elected to be the primary or leader. The others are known as secondaries, followers, or read replicas. Writes are always sent first to the primary, which replicates those writes to the secondaries. If strong durability is required, the primary will wait until a majority of the secondaries acknowledge the write before returning a response. If the secondaries are spread across multiple physical data centers, it then becomes extremely unlikely you will lose data, but your writes will take longer due to all the extra network hops.

Clusters also provide high availability by monitoring the primary and automatically electing a new one if it becomes unresponsive. The primary can then be fixed or replaced, and rejoin the cluster as a secondary. This process can also be used to incrementally upgrade the database engine software while keeping the cluster online and available.

Since all secondaries eventually get a copy of the same data, reads are often routed to the secondaries, especially if the calling application can tolerate slightly stale data. If not, the application can read from a majority of the secondaries and use the most up-to-date response. This keeps read traffic off of the primary so that it can handle a higher volume of writes.

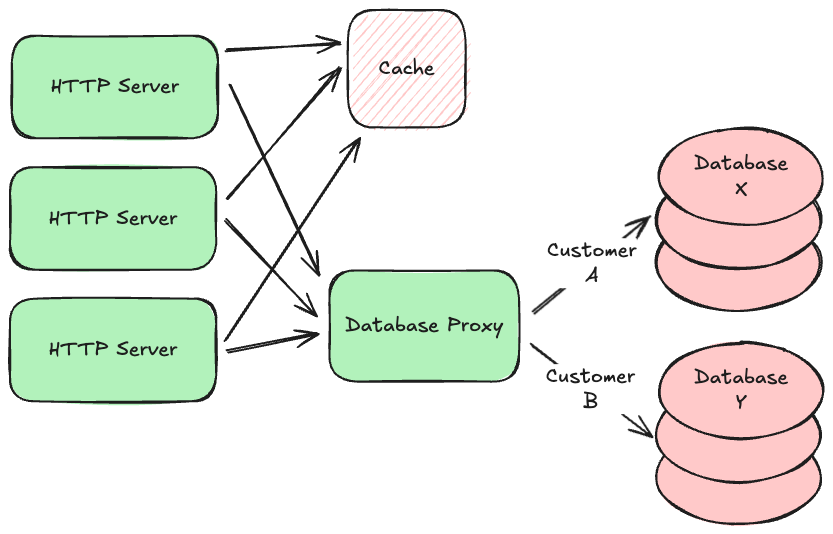

Caches

In some kinds of systems, requests that read data far outnumber requests that write new data. We call these read-heavy systems as opposed to write-heavy ones. Read-heavy systems often benefit from caches, which are very fast, in-memory, non-durable key/value stores. They are typically used to hold on to data that takes a while to gather or calculate, but will likely be requested again in the near future. For example, social media sites often use caches to hold on to the contents of your feed so that they can render it more quickly the next time you view it. Popular open-source caches include Redis and Memcached.

Using a cache is about making a tradeoff between performance and data freshness. Since the data in the cache is a snapshot of data from your persistent database (or some other service), that data might have changed since you put the snapshot in the cache. But if you know that data doesn’t tend to change very often, or if your application can tolerate slightly stale data in some circumstances, reading from your cache instead of the original source will not only be faster, it will also reduce load on that source.

The main tuning parameter for this tradeoff is the Time to Live (TTL) setting for each value you put in the cache. This is the time duration for which the cache will hold on to the data. After the TTL expires, the cache will “forget” the data, and your application will have to read a fresh version again from the original source. A short TTL will improve data freshness but decrease performance, while a long TTL will do the opposite.

If your HTTP server tries to load some data from the cache but it’s no longer there (known as a cache miss), getting that data will actually be slower than if you didn’t have a cache at all, as your server had to make an additional network round-trip to the cache before going to the source. This is why it’s never free to just add a cache. You should only add one when you are confident that it will improve your average system performance. Analyze your system metrics before and after the introduction of a cache, and if it doesn’t result in a noticeable improvement, remove it.
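The read path described above can be sketched in a few lines. This is an in-process stand-in for a real cache like Redis, with class and function names of my own choosing, and it takes the clock as a parameter only so that expiry is easy to demonstrate.

```python
import time


class TTLCache:
    """A tiny in-process cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock
        self._entries = {}  # key -> (expires_at, value)

    def get(self, key):
        entry = self._entries.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._entries[key]  # TTL expired: "forget" the value
            return None
        return value

    def set(self, key, value):
        self._entries[key] = (self.clock() + self.ttl_seconds, value)


def read_through(cache, key, load_from_source):
    """Return the cached value, falling back to the source on a miss."""
    value = cache.get(key)
    if value is None:  # cache miss (this sketch cannot cache None itself)
        value = load_from_source(key)
        cache.set(key, value)
    return value
```

On a hit, `load_from_source` is never called, which is where the savings come from. On a miss, you pay for both the cache lookup and the trip to the source, which is the extra cost discussed above.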

Buckets

Databases are fantastic for small structured data records, but they are a poor choice for large binary content like documents, pictures, video, or music. These kinds of files are often referred to as BLOBs or “Binary Large Objects” and the best home for them is a bucket.

A bucket is like a file system that is hosted by your cloud provider. You can write files to a particular file path, read them given that path, and list paths given a common prefix. Examples include AWS S3, Google Cloud Storage, and Azure Blob Storage.

Buckets are designed to store large numbers of potentially huge files, but most lack the ability to store structured metadata about those files, so we often combine them with databases. For example, a social media system might store a structured record about your post in a database, but put the pictures or videos attached to your post into a bucket.

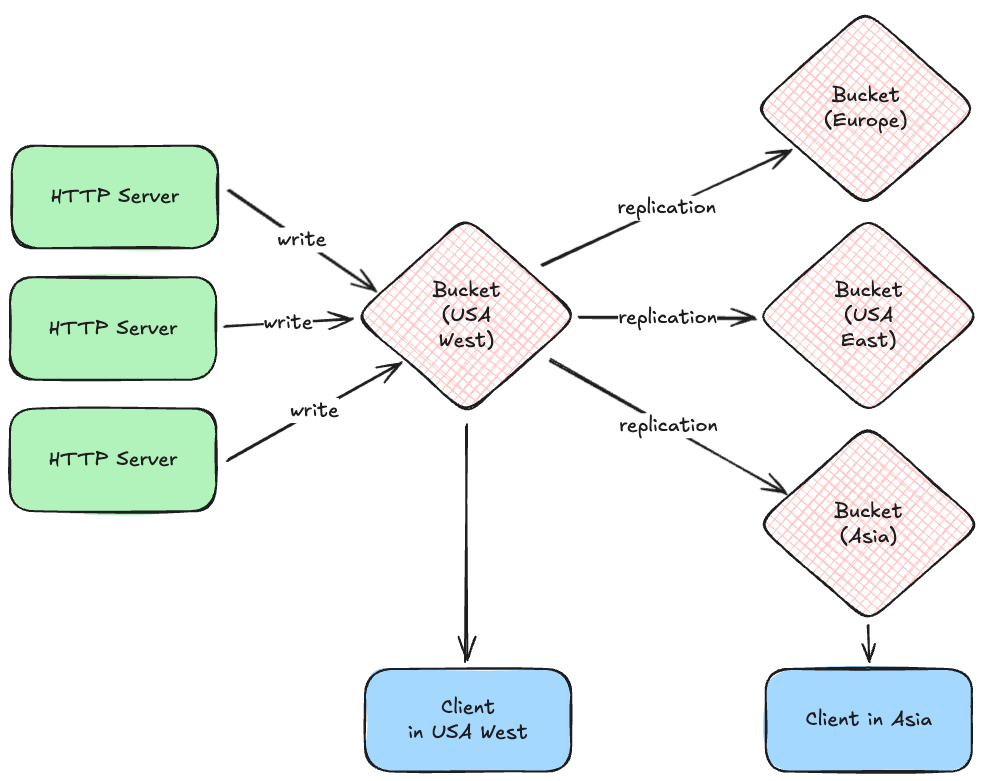

Most cloud providers give you the option of replicating bucket files between geographic regions, and obtaining region-specific URLs for files, so that your clients can quickly download them. For example, your system might write files initially to a bucket in a USA west coast data center, but those are quickly replicated to other regions, allowing a client in Asia to download the file from a much closer data center.

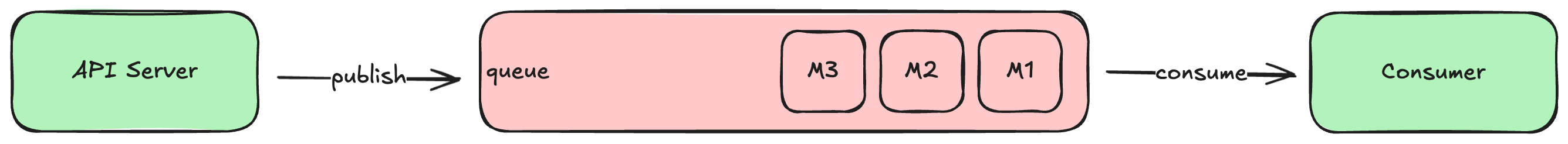

Queues and Consumers

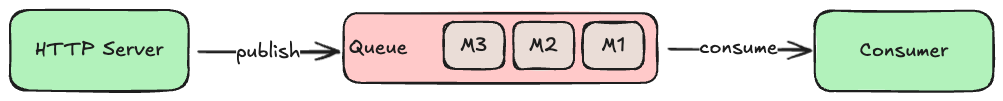

When your HTTP server processes a request, it will likely need to read or write some data synchronously (i.e., before responding to the client), but some work could be done asynchronously. For example, when a customer signs up for a new account, the HTTP server must write a new record in the accounts database, but sending a confirmation or welcome email could be done later. To ensure this task actually gets done, we typically use a queue.

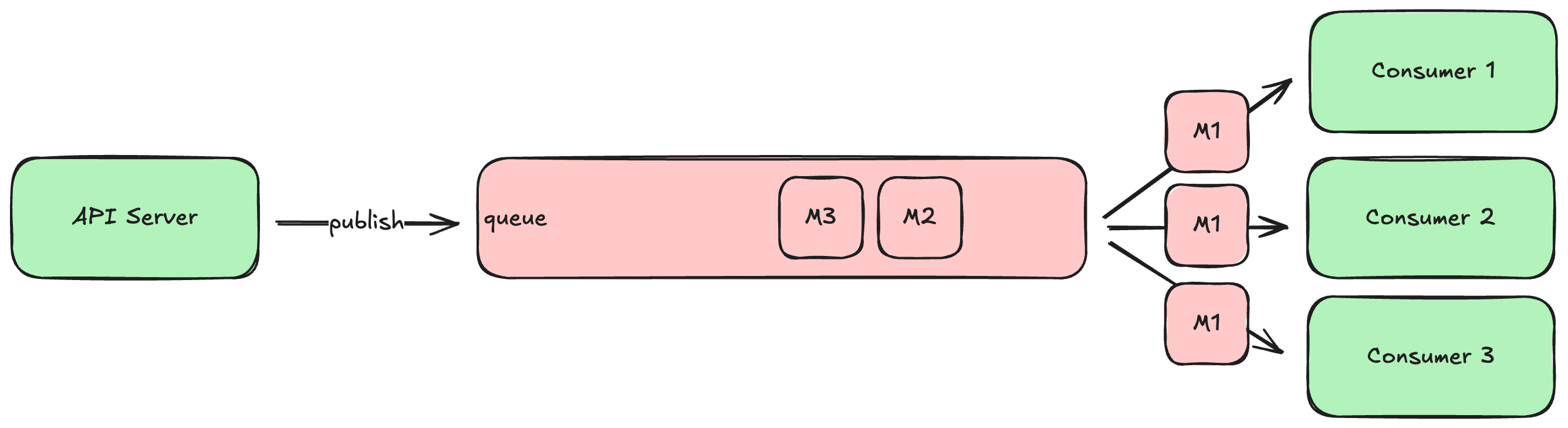

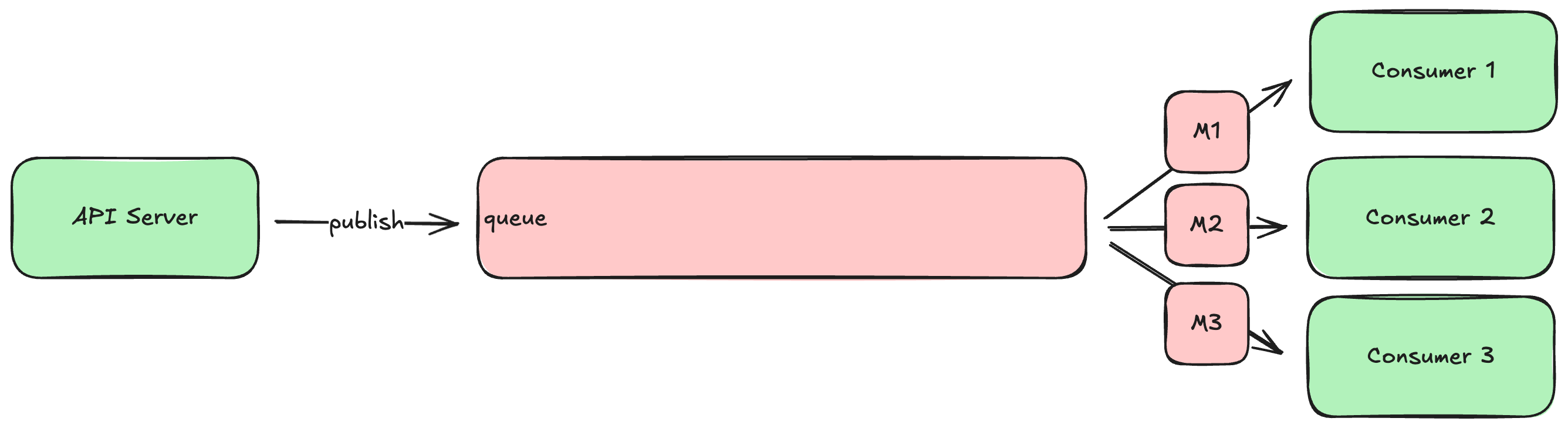

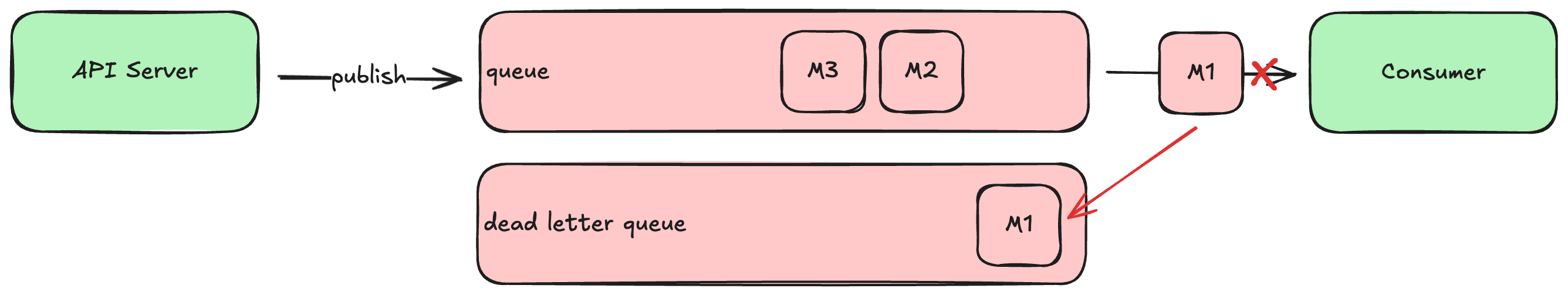

A queue is a special kind of database that tracks an ordered list of transient records, which are often called messages. Your HTTP server (or any system component) can publish new messages to the end of the queue, and other components consume those messages from the head of the queue, processing them in some way (e.g., send an email). If there are multiple consumers, queues can be configured to deliver each message to the first available consumer (job queue model), or each message to all consumers (broadcast model). Some queues will remove messages after consumers acknowledge successful processing, while others make consumers track where in the queue they would like to start reading.
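The difference between the two delivery models is easier to see in code. Below is an in-memory sketch of each, with class names I made up for the purpose. It leaves out acknowledgements, durability, and networking, all of which a real queue provides.

```python
from collections import deque


class JobQueue:
    """Job queue model: each message goes to exactly one consumer."""

    def __init__(self):
        self._messages = deque()

    def publish(self, message):
        self._messages.append(message)  # new messages join the end

    def consume(self):
        # Consumers take from the head; returns None when the queue is empty.
        return self._messages.popleft() if self._messages else None


class BroadcastLog:
    """Broadcast model: every consumer sees every message.

    Messages are kept, and each consumer tracks its own read position.
    """

    def __init__(self):
        self._messages = []
        self._offsets = {}  # consumer name -> index of next message to read

    def publish(self, message):
        self._messages.append(message)

    def consume(self, consumer):
        offset = self._offsets.get(consumer, 0)
        if offset >= len(self._messages):
            return None  # this consumer is caught up
        self._offsets[consumer] = offset + 1
        return self._messages[offset]
```

In the job queue, a consumed message is gone, so two consumers split the work between them. In the broadcast log, nothing is removed on read, so each consumer works through the full list at its own pace.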

Another common use of a queue is as a source for notifications that a WebSocket server should send to clients. For example, when a user makes a new post to a social media system, the HTTP server processing that request will write the post to the database (and maybe attached media files to a bucket), and then publish a message about the post to a queue. The WebSocket servers then consume those messages from the queue, forwarding each message to all connected clients. This allows clients to immediately display the new post without having to ask the server for it.

Many queues will let you define multiple topics within the same server, which allows you to have multiple independent streams of messages. Different consumers can read from different topics: for example, you might define one topic for “new accounts” and another for “password resets” and have different consumers dedicated to each.

The most sophisticated queues also support running in clusters, similar to the database clusters discussed earlier. This allows the queue to remain available even when one of the servers goes down, or needs to be upgraded. It can also ensure durability by replicating new messages to a majority of the secondaries before responding to the publisher.

Common examples of open-source self-hosted queues are Kafka and RabbitMQ. Cloud providers also offer hosted versions of these products, or their own queuing solutions, such as AWS MSK or AWS SQS.

Periodic Jobs

So far all the system components we’ve discussed run continuously and react to things like synchronous requests or asynchronous messages published to a queue. But in many systems we need other components that just run periodically, on a schedule. For example, payment systems need to generate clearing files of all approved transactions at least once a day and send them to the payment network. Or a system with an ML model might want to retrain that model once a week based on new observations.

These periodic jobs are just scripts that get executed by a scheduler. They start, run to completion, and exit. In between runs, nothing is executing except for the scheduler.

The scheduler’s primary job is to run jobs at the appropriate times, but most schedulers also record information about each run, including detailed log messages written by jobs as they execute. This allows operators to view whether jobs encountered errors, and diagnose the cause.

Examples of periodic job schedulers range from the simple yet tried and true cron to the very sophisticated and resilient Temporal.

ML Models

Many systems these days also use Machine Learning (ML) models to make predictions about the data they are processing, or the actions their users will likely take next. For example, a social media system might use ML models to predict tags for new images, or to screen new posts for malicious content. A payment system might use them to predict the probability that a transaction is fraudulent, or the likelihood that it will be declined.

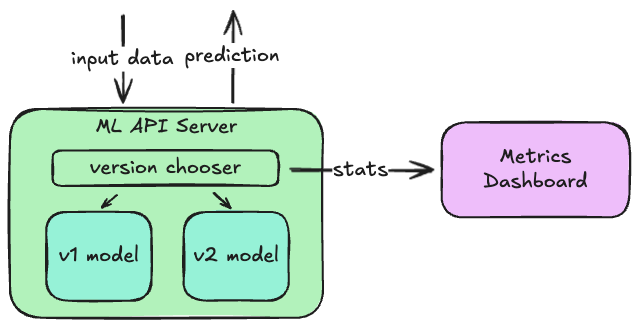

Sometimes these models can be run in-process with the main API servers, but it’s more common to host these in their own API servers that are then called by either the main API servers or a message queue consumer. This is especially useful when you want to test new versions of a model: a small portion of requests can be routed to the new model, and its predictions can be compared to those made by the older model for the other requests. If the distribution of predictions is not what you expect, you can quickly switch back to the previous version without needing to update the callers.
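One way to route a small portion of requests to a new model is to hash each request ID into a bucket from 0 to 99 and send the lowest few buckets to the new version. This is only a sketch: the 5% share, the function name, and the version labels are assumptions for illustration.

```python
import hashlib

CANARY_PERCENT = 5  # hypothetical share of traffic sent to the new model


def model_version_for(request_id: str, canary_percent: int = CANARY_PERCENT) -> str:
    """Pick "new" for roughly canary_percent% of request IDs, else "current"."""
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return "new" if bucket < canary_percent else "current"
```

Because the choice depends only on the request ID, the same request always goes to the same model, which makes the two sets of predictions easier to compare. Setting the percentage to zero switches everything back to the current model without touching the callers.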

Metrics and Logs

Unlike GUI clients, server-side components are pretty invisible on their own: you can make a request and observe the response, but you can’t really see the requests made by others or how well they are being processed. To make our system observable we publish metrics and logs from all the components. These are sent to, or collected by, other components that let us view these metrics on dashboard charts, or query the logs.

The most popular open-source metrics solution these days is Prometheus, which periodically gathers metrics from all your servers, aggregates and monitors them, and provides a query API to dashboard clients like Grafana. When metrics stray outside your established normal bounds, Prometheus can send alerts to your team via email, Slack, or PagerDuty.

Metrics can include machine-level things like CPU and memory usage, or API-level things like the percentage of error responses or the request latency distribution. When these metrics go out of tolerance, it’s likely a sign that something bad is happening, and an operator might need to intervene to keep the system running properly.
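To give a feel for what “out of tolerance” might mean for API-level metrics, here is a small sketch that computes an error rate and a 95th-percentile latency from a batch of requests and compares them to thresholds. The thresholds and function names are invented for the example; in practice a tool like Prometheus does this aggregation and alerting for you.

```python
import math


def error_rate(status_codes):
    """Fraction of responses with a 5xx status code."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if code >= 500)
    return errors / len(status_codes)


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def out_of_tolerance(status_codes, latencies_ms, max_error_rate=0.01, max_p95_ms=500):
    """Return the names of metrics that exceed their (hypothetical) thresholds."""
    alerts = []
    if error_rate(status_codes) > max_error_rate:
        alerts.append("error_rate")
    if latencies_ms and percentile(latencies_ms, 95) > max_p95_ms:
        alerts.append("p95_latency")
    return alerts
```

A percentile is usually a better latency signal than an average, because a handful of very slow requests can hide behind a healthy-looking mean.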

Log messages are typically written by a server to its standard output stream, and that can be read by various logging services. The most popular these days is Splunk, which provides a very sophisticated query language. API servers typically write log lines about every request they process, and every error they encounter, so that operators can get detailed information about what is happening and what went wrong.

Conclusion

Most systems you use every day are composed of these basic building blocks. Now that you know what they are and what to use them for, we can combine them to produce just about any sort of system we want to build!

Trade-Offs and Trapdoors

When you build a new system from scratch, you have to make a lot of decisions:

- Which programming languages should I use?

- Which framework should I use for my API server?

- Should I build “serverless” functions or continuously-running servers?

- Which database should I use?

- Should I build a web client, native mobile apps, or both?

- Which framework should I use for my web client?

- Should I build my mobile apps using native platform languages and tools, or using a cross platform framework?

It can be a bit overwhelming. Unfortunately, there is no one right answer to any of these questions beyond “it depends.” Perhaps a better way to say that is:

Every decision in software engineering is a trade-off that optimizes for some things at the cost of other things. And while some of these decisions can be easily reversed in the future, others are trapdoors.

This implies two questions to answer when making these kinds of decisions:

- What do I want to optimize for? What will actually make a difference toward achieving my goals, and what doesn’t really matter right now? Optimize for the things that actually matter, and don’t worry about the rest until you need to.

- How easily could this decision be reversed in the future if necessary? If it’s easy to reverse, don’t sweat it: make the appropriate tradeoff based on your current situation and move on. But some decisions are more like trapdoors in that they become prohibitively expensive to change after a short period of time. These can become a millstone around your neck if you make the wrong bet, so they require more consideration.

Let’s put these principles into practice by analyzing a few common trade-offs system builders have to make early on, many of which can become trapdoors. For each we will consider what qualities you could optimize for, and which would be most important given various contexts. For those that could be trapdoors, I’ll also discuss a few techniques you can use to mitigate the downsides of making the wrong decision.

Choosing Programming Languages and Frameworks

One of the earliest choices you have to make is which programming languages and frameworks you will use to build your system. Like all decisions in software, this is a trade-off, so it ultimately comes down to what you want to optimize for:

- Personal Familiarity: Founding engineers will typically gravitate towards languages and frameworks they already know well so they can make progress quickly. This is fine, but you should also consider…

- Available Labor Pool: If your system becomes successful, you will likely need to hire more engineers, so you should also consider how easy it will be to find, hire, and onboard engineers who can be productive in your chosen languages/frameworks. For example, the number of experienced Java or Python engineers in the world is far larger than the number of experienced Rust or Elixir engineers. It will be easier to find (and maybe cheaper to hire) the former than the latter.

- Legibility: Code bases written in languages that use static typing (or type hints) are typically more legible than those that use dynamic typing. This means that it’s easier for new engineers to read and understand the code, enabling them to be productive sooner. IDEs can also leverage the typing to provide features like statement completion, jump-to-source, and informational popups. Rust, Go, Java, Kotlin, and TypeScript are all statically-typed, while plain JavaScript is not. Languages like Python and Ruby have added support for optional type hints that various tooling can leverage to provide similar legibility features, but they are not required by default.

- Developer Experience and Velocity: How quickly will your engineers be able to implement a new feature, or make major changes to existing ones? Some languages simply require less code to accomplish the same tasks (e.g., Python or Ruby vs Java or Go), and some libraries make implementing particular features very easy. Static typing makes it easier to refactor existing code, and good compilers/linters make it easier to verify that refactors didn’t break anything. All of these lead to more efficient and happy engineers, which shortens your time-to-market.

- Runtime Execution Speed: A fully-compiled language like Rust, Go, or C++ will start up and run faster than JIT-compiled languages like Java and interpreted languages like Python or JavaScript. But this only really matters if your system is more compute-bound than I/O-bound (that is, it spends more time executing the code you wrote than it does waiting for databases, network requests, and other I/O to complete). Most data-centric systems are actually I/O-bound, so the latency benefits of a fully-compiled language may or may not matter for them.

- Async I/O Support: The thing I/O-bound systems do benefit from greatly, however, is support for asynchronous I/O. With synchronous I/O, the CPU thread processing a given API server request is blocked while it waits for database queries and other network I/O to complete. With async I/O, the CPU can continue to make progress on other requests while the operating system waits for the database response. This greatly improves the number of concurrent requests a given server can handle, which allows you to scale up for less cost. Go and JavaScript on Node do this natively. Java, Kotlin, and Python have added support for it in the language, but older libraries and frameworks may still use synchronous I/O.

- Runtime Efficiency: Generally speaking, languages like Rust and C++ require less CPU and RAM than languages like Python or Ruby to accomplish the same tasks. This allows you to scale up with fewer resources, resulting in lower operating costs, regardless of whether you are using async I/O.

- Libraries and Tooling: Sometimes the choice of language is heavily swayed by particular libraries or tooling you want to use. For example, machine-learning pipelines often use Python because of libraries like NumPy and Pandas. Command-line interfaces (CLIs) like Docker and Terraform are typically written in Go because the tooling makes it easy to build standalone executables for multiple platforms.

- Longevity: Languages, and especially frameworks, come and go. What’s hot today may not be hot tomorrow. But some languages (C++, Java, Node, Python, Go) and frameworks have stood the test of time and are used pervasively. They are already very optimized, battle-tested, well-documented, and likely to be supported for decades to come. It’s also relatively easy to find engineers who are at least familiar with them, if not experienced in using them.
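To make the async I/O point from the list above a bit more concrete, here is a minimal sketch using Python's asyncio. The fake_query function and its delays are stand-ins I made up for real database calls, so treat this as an illustration of the idea rather than a benchmark.

```python
import asyncio
import time


async def fake_query(name: str, delay: float) -> str:
    """Hypothetical stand-in for a database call: waits without blocking the thread."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def handle_all(delays: list[float]) -> list[str]:
    # Start every "query" at once; the event loop interleaves the waits
    # on a single thread instead of blocking on each one in turn.
    tasks = [fake_query(f"query-{i}", d) for i, d in enumerate(delays)]
    return await asyncio.gather(*tasks)


def timed_run(delays: list[float]) -> tuple[list[str], float]:
    """Run all queries concurrently and report the wall-clock time taken."""
    start = time.perf_counter()
    results = asyncio.run(handle_all(delays))
    return results, time.perf_counter() - start
```

Calling timed_run([0.1, 0.1, 0.1]) should take roughly as long as the slowest wait rather than the sum of all three, which is the effect that lets one server handle many concurrent I/O-bound requests.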

Which of these qualities you optimize for will probably depend on your particular context:

- Young Startup: If you are a young startup looking for product-market fit (PMF), you should definitely optimize for developer velocity in the short run. If you end up running out of money because it took too long to build the right product, it won’t matter which languages you chose. But if you do succeed, you will need to hire more engineers, which is when you want to optimize for legibility and available labor pool as well.

- Low-Margin Business: If you’re building a system to support a low-margin business, you might want to optimize for qualities that allow you to scale up while keeping operating costs low: async I/O, runtime efficiency, larger labor pool with cheaper engineers, etc.

- Critical-Path Component: If you are building a system component that needs to be very efficient and high performance, you should obviously optimize for those qualities over others like developer velocity.

- Open-Source Project: If you are starting a new open-source project, you might want to optimize for available labor pool and legibility, so that you can attract and retain contributors.

If you just want some general advice, these are the rules I tend to follow:

- Favor statically-typed languages: The benefits of static typing far outweigh the minor inconvenience of declaring method argument and return types (most languages automatically work out the types of local variables and constants). Code bases without declared types are fragile and hard to read.

- Use async I/O when I/O-bound: If your API servers spend most of their time talking to databases and other servers, use a language, framework, and libraries that all have solid support for async I/O. This will improve performance and allow you to scale with less operational cost. But beware of languages where async I/O was recently bolted-on, as most frameworks and libraries won’t support it yet.

- Avoid trendy but unproven languages/frameworks: What works in a proof-of-concept may not work in production at scale. Choose languages and frameworks that have a proven track record.

When I build I/O-bound API servers or message queue consumers, I generally prefer Go or TypeScript on Node. Rust is very performant and efficient, and has a fabulous tool chain, but it’s difficult to learn so it’s harder to find other engineers who can be productive in it quickly. Java or Kotlin are also fine choices if you use a framework with good async I/O support (e.g., Reactor or Spring WebFlux). The same could be said for Python if you require the use of async I/O and type hints.

Regardless of which languages you choose, this decision will likely be a trapdoor one. Once you write a bunch of code, it becomes very costly and time-consuming to rewrite it in another language (though AI might make some of this more tractable). But there are a few techniques you can use to mitigate the costs of changing languages in the future:

- Separate System Components: Your API servers, message consumers, and periodic jobs will naturally be separate executables, so they can be implemented in different languages. Early on you will likely want to use the same language for all of them so you can share code and reduce the cognitive load. But if you decide to change languages in the future, you can rewrite the components incrementally without having to change the others at the same time.

- Serverless Functions: If it makes sense to build your system with so-called “serverless” functions, those are also separate components that can be ported to a new language incrementally. API gateways and other functions call them via network requests, so you can change the implementation language over time without having to rewrite the callers as well.

- Segmented API Servers: If you have a single API server and decide to rewrite it in a different language, you can do so incrementally by segmenting it into multiple servers behind an API gateway. Taken to an extreme, this approach is known as microservices and it can introduce more problems than it solves, but you don’t have to be so extreme. Assuming you are just changing languages, and don’t need to scale them differently, the segmented servers can still all run on the same machine, and communicate with each other over an efficient local inter-process channel such as Unix domain sockets.
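As a rough illustration of the segmented approach, a gateway only needs a small routing table that maps resource path prefixes to whichever server currently owns them. The prefixes and socket paths below are hypothetical, and a real gateway would of course also forward the request.

```python
# Hypothetical routing table: path prefix -> backend address.
ROUTES = {
    "/accounts": "unix:/tmp/accounts.sock",  # already ported to the new language
    "/orders": "unix:/tmp/orders.sock",      # already ported to the new language
}
DEFAULT_BACKEND = "unix:/tmp/legacy.sock"    # everything not yet migrated


def pick_backend(path: str) -> str:
    """Return the backend for the longest matching path prefix."""
    best = ""
    for prefix in ROUTES:
        # Match whole path segments only, so /accountsx does not match /accounts.
        matches = path == prefix or path.startswith(prefix + "/")
        if matches and len(prefix) > len(best):
            best = prefix
    return ROUTES.get(best, DEFAULT_BACKEND)
```

Migrating another segment then becomes a matter of adding one more entry to the table once its new server is ready.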

Choosing Databases

Another critical decision system builders need to make early on is which kind of database to use. This also quickly becomes a trapdoor decision once you start writing production data to it—at that point, switching databases can get very complicated, especially if your system can’t have any downtime.

Like all decisions in software, choosing a database is all about making trade-offs. But unlike programming languages, the set of things you can optimize for tends to collapse into just a few dimensions:

- Administration vs Operating Cost: In the bad old days, we had to run our own database instances, monitor them closely, scale up and partition manually, and run periodic backups. As the amount of data grew, this typically required hiring a dedicated team of database administrators (DBAs). These days you can outsource this to the various cloud providers (AWS, Google, Azure) by using one of their hosted auto-scaling solutions—for example, DynamoDB, AWS Aurora Serverless, Spanner, or Cosmos DB. These typically cost more to run than a self-hosted solution, but you also don’t have to hire a team of DBAs, nor do any ongoing administration.

- Features vs Scalability and Performance: Very simple key/value stores like DynamoDB can scale as much as you need while maintaining great performance. But they also don’t offer much in the way of features, so your application logic often has to make up for that. Thankfully, cloud providers have recently made this trade-off less onerous by offering hosted auto-scaling relational database solutions that are quite feature-rich (e.g., AWS Aurora Serverless and Spanner).

- Database vs Application Schema: Relational databases have fairly rigid schemas, which need to be migrated when you add or change the shape of tables. Document-oriented databases like DynamoDB or MongoDB allow records to contain whatever properties they want, and vary from record to record. This flexibility can be handy, but the truth is that you must enforce some amount of schema somewhere if you want to do anything intelligent with the data you store in your database. If you can’t count on records having a particular shape, you can’t really write logic that manipulates or reacts to them. So the trade-off here is really about where (not if) you define schema and handle migration of old records. With relational databases you do this in the database itself; with document-oriented databases you do this in your application code.
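To show what defining schema in application code can look like, here is a small sketch that upgrades old document records as they are read. The schema_version field and the name split are assumptions I invented for the example, not a convention of any particular database.

```python
def migrate(record: dict) -> dict:
    """Upgrade a stored record to the current shape, one version at a time."""
    upgraded = dict(record)  # never mutate the caller's copy
    version = upgraded.get("schema_version", 1)
    if version == 1:
        # Hypothetical change: v1 stored a single "name"; v2 splits it in two.
        first, _, last = upgraded.pop("name", "").partition(" ")
        upgraded["first_name"] = first
        upgraded["last_name"] = last
        version = 2
    upgraded["schema_version"] = version
    return upgraded
```

Records already at the current version pass through unchanged, so the rest of the application can count on one shape.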

Which side you optimize for on these trade-off dimensions will likely depend on your context, but these days (2025), using a hosted auto-scaling solution is almost always the right choice. The only reason to run your own database instances is if you are a low-margin business and you think you can do it cheaper than the hosted solution.

The auto-scaling relational solutions like Spanner or AWS Aurora Serverless also make the second trade-off less relevant than it used to be. These days you can get automatic scaling with consistent performance and have access to most of the powerful relational features: flexible queries with joins, range updates and deletes, pre-defined views, multiple strongly-consistent indexes, constraints, etc.

If you need those relational features, then that determines the last trade-off as well: schema will be defined in the database and migrations will typically be done by executing Data Definition Language (DDL) scripts. But if you don’t need relational features, you can define your schema and implement migration logic in your application code instead.

Although choosing a database is a trapdoor decision, you can make it easier to change databases in the future by using a layered internal architecture that isolates your database-specific code in the persistence layer. If you change databases, you only need to rewrite that layer—all the layers above shouldn’t have to change.
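Here is one way that isolation might look, sketched in Python. The AccountStore interface and its two methods are hypothetical names; the point is only that the service-layer function never touches anything database-specific.

```python
from typing import Optional, Protocol


class AccountStore(Protocol):
    """What the upper layers depend on; no database details leak out."""

    def get(self, account_id: str) -> Optional[dict]: ...
    def put(self, account_id: str, account: dict) -> None: ...


class InMemoryAccountStore:
    """One interchangeable implementation; a SQL-backed or document-backed
    class would expose the same two methods."""

    def __init__(self) -> None:
        self._rows: dict[str, dict] = {}

    def get(self, account_id: str) -> Optional[dict]:
        return self._rows.get(account_id)

    def put(self, account_id: str, account: dict) -> None:
        self._rows[account_id] = dict(account)


def rename_account(store: AccountStore, account_id: str, new_name: str) -> bool:
    """Service-layer logic written only against the AccountStore interface."""
    account = store.get(account_id)
    if account is None:
        return False
    store.put(account_id, {**account, "name": new_name})
    return True
```

Swapping databases then means writing a new class with the same methods, while rename_account and everything above it stay as they are.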

Moving the existing production data to your new database can be more challenging. If you can afford a small bit of downtime, you can shut down your API servers, copy the data to the new database, and deploy the new version of your API servers pointed at the new database. But if you can’t afford downtime, you can do dual-writes while you cut over to the new database. That is, you send inserts and updates to both the new and old databases to keep them in sync while you shift which database is the source of truth. Once the cut-over is complete, you stop writing to the old database and only write to the new one.
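A minimal sketch of the dual-write idea follows. DictStore is a toy stand-in for a real database client, and the read_from_new flag is a hypothetical switch; a production cut-over would also need to backfill existing data and decide what to do when one of the two writes fails.

```python
class DictStore:
    """Toy stand-in for a database client."""

    def __init__(self):
        self.rows = {}

    def put(self, key, value):
        self.rows[key] = value

    def get(self, key):
        return self.rows.get(key)


class DualWriteStore:
    """Writes to both databases; reads from whichever is the source of truth."""

    def __init__(self, old, new, read_from_new=False):
        self.old = old
        self.new = new
        self.read_from_new = read_from_new  # flip once the new database is trusted

    def put(self, key, value):
        # Every write goes to both databases so they stay in sync.
        self.old.put(key, value)
        self.new.put(key, value)

    def get(self, key):
        source = self.new if self.read_from_new else self.old
        return source.get(key)
```

Because both stores receive every write, flipping read_from_new changes the source of truth without any visible difference to callers.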

Conclusion

Every decision in software engineering is a trade-off that optimizes for some things at the cost of other things. Some of those decisions can be easily reversed in the future, but some are more like trapdoors that become prohibitively expensive to change after a short period of time.

Making good decisions can be tricky, but if you focus on what you actually need to optimize for, as opposed to what would be nice to have, that will often lead you to the correct choice.

The Hypertext Transfer Protocol (HTTP)

The thing that defines the web more than anything else is its underlying communication standard: the HyperText Transfer Protocol (HTTP). If you want to build successful web applications, you need to understand this protocol and how it works. The good news is that it’s stupidly simple. One of the reasons the web grew as fast as it did is that the underlying protocol is clear, straightforward, and uncomplicated. Anyone can learn it in a matter of minutes, and once you learn it, you’ll understand what’s really happening when you browse the web, make HTTP requests from JavaScript, or handle those requests within an HTTP server.

Key Terms

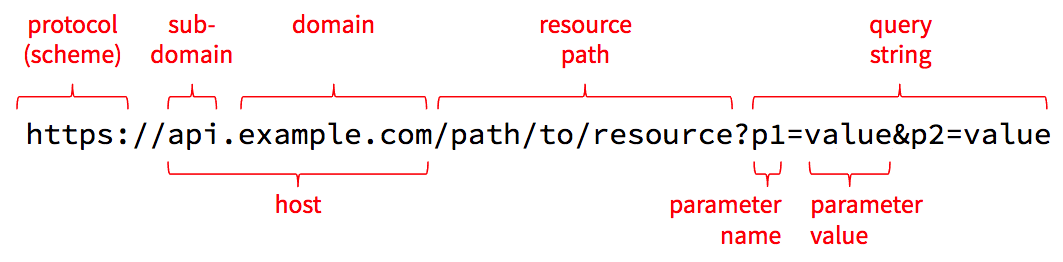

Before we look at the protocol itself, we need to review and solidify a few key terms. The best way to do that is to look at the anatomy of a URL. A URL is a string of characters, but it’s divided into a few distinct parts, each of which is used during an HTTP request.

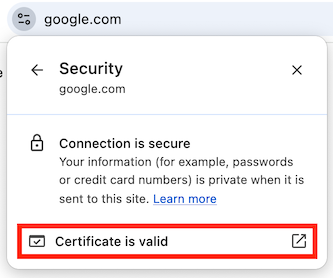

Protocol

The first part of the URL names the protocol to use, which is sometimes referred to as the scheme. The name http refers to HTTP, and https refers to a combination of HTTP and Transport Layer Security (TLS). When using TLS, all requests and responses are encrypted as they are sent across the network so that an attacker in the middle can’t read the contents. This results in a bit of computational and size overhead, but today’s computers and networks are fast enough that HTTPS is quickly becoming the standard for all web traffic.

Never enter sensitive information into a web page where the URL starts with http, and never send sensitive data from JavaScript over an http connection. Anyone with access to the network can read everything sent over http in plain text. Make sure you use https when transmitting sensitive information.

Host

The next part is the host which is the name of the computer we want to talk to. The host can be a domain name such as example.com, or it can be a sub-domain like api.example.com. Domain names have to be purchased from domain registrars, but once you register one, you can create as many sub-domains as you like and adjust them whenever necessary.

To make a network connection, the client needs to translate the host name into a numeric IP address. It does this using the Domain Name System (DNS). The DNS is a bit like a telephone book that one can use to resolve a host name to an IP address, and you can access it right from the command line.

Open a new command-line window (Terminal on Mac or the Windows Subsystem for Linux on Windows) and type this command:

nslookup google.com

Sample Output (yours may differ)

Name: google.com

Address: 142.251.33.78

In addition to nslookup, Mac and Linux users can also use the more concise host command:

host google.com

Sample Output

google.com has address 142.251.33.78

google.com has IPv6 address 2607:f8b0:400a:805::200e

google.com mail is handled by 10 smtp.google.com.

The google.com host name resolved to just one IP address for me, but other domain names might resolve to multiple. For example, try microsoft.com instead. It should return several IP addresses, any of which can be used by a web client.

Mac and Linux users can also use the more powerful dig command to see details about the query sent to the DNS and its reply:

dig google.com

These commands talk to the DNS directly, but most programs (including web browsers) resolve host names through the operating system, which typically also consults a hosts file on your local computer that contains well-known host names and their associated IP addresses. On Mac and Linux, this file is at /etc/hosts, and on Windows it’s at c:\Windows\System32\Drivers\etc\hosts. To see the contents of this file use this command:

# on Mac and Linux

cat /etc/hosts

# on Windows (PowerShell; in Command Prompt use type instead of cat)

cat c:\Windows\System32\Drivers\etc\hosts

You’ll probably have at least one line in that file that defines the host localhost to be the IPv4 address 127.0.0.1, and possibly another line that defines the IPv6 address to be ::1. These are known as loopback addresses because they just loop back to the same machine from which the request is made: your local computer. Thus, the host localhost is an alias for your computer.
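You can do the same kind of lookup from code. The sketch below uses Python's standard socket and ipaddress modules; the resolve and is_loopback helper names are my own. Note that getaddrinfo goes through the operating system's resolver, so unlike the lookup commands above it typically honors the hosts file as well.

```python
import ipaddress
import socket


def resolve(host: str) -> list[str]:
    """Return the unique IP addresses the operating system resolves for host."""
    infos = socket.getaddrinfo(host, None, proto=socket.IPPROTO_TCP)
    # Each entry is (family, type, proto, canonname, sockaddr);
    # sockaddr[0] is the address string for both IPv4 and IPv6.
    return sorted({info[4][0] for info in infos})


def is_loopback(address: str) -> bool:
    """True for addresses that loop back to the local machine."""
    return ipaddress.ip_address(address).is_loopback
```

On most machines, every address returned by resolve("localhost") should satisfy is_loopback, while the addresses returned for a public host name will not.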

Port

The host and associated IP address can get you connected to a server across the Internet, but that server might be listening for network requests on many different ports. You can think of an IP address like the street address of an apartment building, while the port number is the number of a specific apartment inside. To connect to a web server, we need both the host/IP and a port number.

As a convention, web servers listen on port 80 for unencrypted HTTP requests, and port 443 for encrypted HTTPS requests. If you don’t specify a port number in your URL, the browser will assume these conventional ports. But you can override this by including a port number in your URL, like so: http://localhost:4000/path/to/resource. This tells the client to connect to port 4000 instead of the conventional port 80.

Many Unix-based systems (including older versions of macOS) do not allow non-root users to listen on ports lower than 1024, so when you want to build and test an HTTP server on your own machine, you typically need to listen on a higher port number like 4000. Your server will work exactly the same; you just need to include that port number when connecting to your server from a web browser or testing tool.

Origin

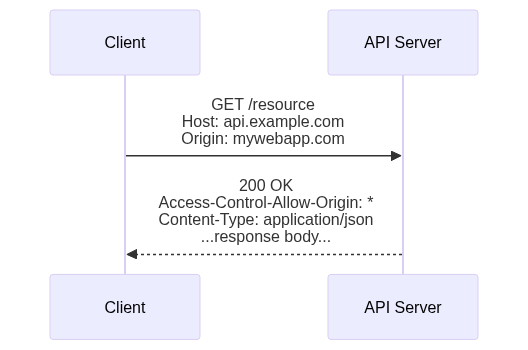

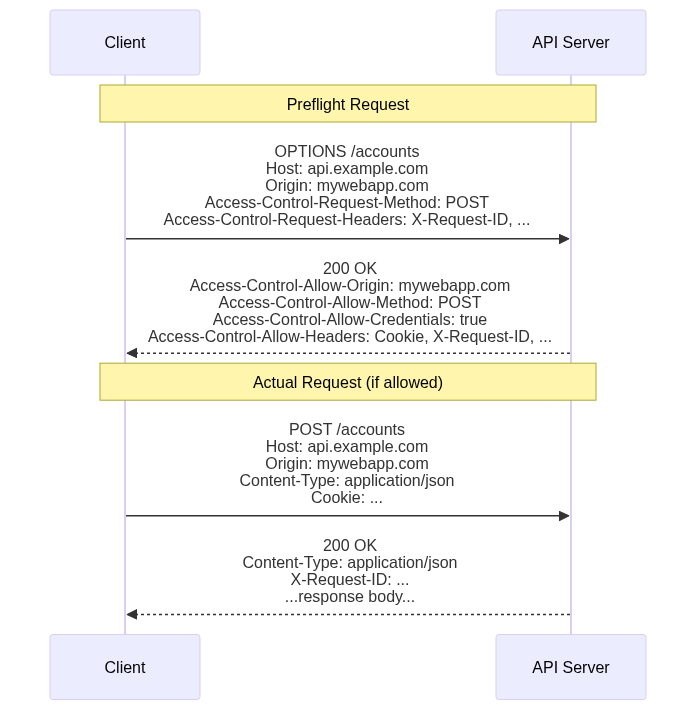

A quick aside: the combination of the protocol, host, and port defines an origin in HTTP. Origins are the primary security boundary within web browsers. Data written to local storage can be read only by code served from the same origin. Cookies are automatically sent during requests to the same origin from which the cookie came, but never to other origins. By default, HTTP requests initiated from JavaScript are limited to the same origin from which the JavaScript came (see the API Servers tutorial for more details on how to override this on the server).
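To see how an origin falls out of a URL, here is a small sketch using Python's urllib.parse. The origin and same_origin helpers are hypothetical names, and this version only knows the conventional ports for http and https.

```python
from urllib.parse import urlsplit

# Conventional ports used when the URL does not name one.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple[str, str, int]:
    """Return (protocol, host, port), filling in the conventional port."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    port = parts.port if parts.port is not None else DEFAULT_PORTS[scheme]
    return scheme, parts.hostname, port


def same_origin(a: str, b: str) -> bool:
    """Two URLs share an origin only if protocol, host, and port all match."""
    return origin(a) == origin(b)
```

For example, https://example.com/a and https://example.com:443/b share an origin, while http://example.com and https://example.com do not, because the protocol (and therefore the port) differs.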

Resource Path

After the host and optional port number, the segment up until the ? is known as the resource path. Technically, this can take any form that the server knows how to interpret, so it doesn’t strictly need to look like a file path, but that path syntax is useful for modeling any set of hierarchically-organized resources.

Although this looks like a file path, it’s critical to understand that it can refer to anything the server can manipulate: a file, a database table/record, an in-memory game state, an AI model, a connected device, or even a controller for a giant mechanical killer robot. The term “resource” is purposely vague and open-ended so that one can enable the manipulation of just about anything via HTTP requests.

Query String, Parameters, and Values

The last part of the URL above contains the query string, which allows the client to pass additional parameters and values that are relevant for the requested resource. These parameters are typically used only when getting the state of the resource, and they are often used to supply filters, sorts, or other options supported by the resource. For example, when getting the /accounts resource, which represents all user accounts in the system, one might supply a query string like ?q=dave&max=50 to find the first 50 users with the name dave.

The query string starts with a ? and is followed by one or more name/value pairs. The name/value pairs are separated by &. The name and value are separated by =. For obvious reasons, literal & and = characters within parameter names or values must be encoded as %26 and %3D respectively, and a literal % must be encoded as %25. The two digits after the % are the hexadecimal value of the character’s byte (characters outside the ASCII range are first converted to UTF-8, and each resulting byte is encoded this way). The encodeURIComponent() function in JavaScript can be used to do this encoding in the browser, and similar functions are available in most other languages.

Technically speaking, spaces and other characters with special meaning in URLs (such as # and +) must also be encoded, as well as characters outside the ASCII range, while letters, digits, and - _ . ~ never need to be. Most client libraries handle this for you, so you rarely need to worry about it.
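As an example of one of those "similar functions," here is how the encoding might be done with Python's urllib.parse, where quote plays a role similar to encodeURIComponent. The sample values are made up for illustration.

```python
from urllib.parse import parse_qs, quote, urlencode

# Encode a single value: & and = must not be mistaken for separators.
encoded_value = quote("a&b=c 100%", safe="")

# Build a whole query string from name/value pairs.
# quote_via=quote encodes spaces as %20 rather than the form-style +.
query = urlencode({"q": "dave & co", "max": 50}, quote_via=quote)

# Decode it again on the receiving side (each value comes back as a list).
decoded = parse_qs(query)

# Characters outside the ASCII range become one %XX per UTF-8 byte.
encoded_accent = quote("é", safe="")
```

Here encoded_value is a%26b%3Dc%20100%25, query is q=dave%20%26%20co&max=50, and encoded_accent is %C3%A9.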

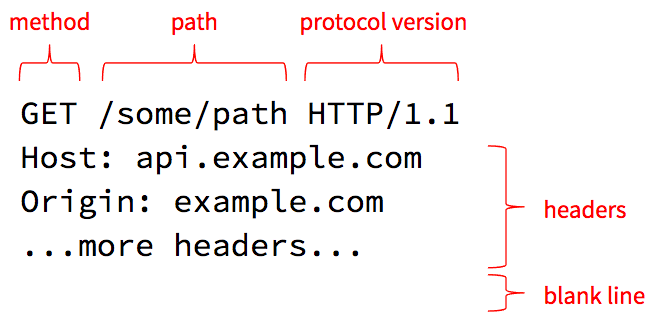

HTTP Requests

Now that we have our terms straight, let’s see how these URL elements are used in an HTTP request.

HTTP 1.1 requests are just plain text, so you can easily read and type them. The first line (simply called the “request line”) contains the method, resource path (which we already discussed earlier), and requested protocol version.

Methods and Resources

The core philosophy of HTTP is that clients invoke methods on resources. The resource is the object and the method is the verb. Or to put it another way, the resource path identifies a thing the server can manage, and the method specifies an action the server should take on that resource.

There are several methods defined in the HTTP standard, and the most commonly-used are as follows:

| method | meaning |
|---|---|
| GET | return the current state of the resource |
| PUT | completely replace the current state of the resource |
| PATCH | partially update the current state of the resource |
| POST | add a new child resource |
| DELETE | delete the resource |
| LINK | link the resource to some other resource |
| UNLINK | unlink the resource from some other resource |
| OPTIONS | list the methods the current user is allowed to use on this resource |

Servers may choose to support other methods, including custom methods they define. This is generally fine, but sometimes you can run into troubles if there is a proxy server in the middle that rejects requests with non-standard methods for security reasons. In that case, developers commonly use POST with a query string parameter or other custom header that indicates what the real method is.
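On the server side, honoring that workaround can be as simple as the sketch below. The X-HTTP-Method-Override header and the _method query parameter are common conventions, but they are assumptions here: your server is free to define whatever names it wants.

```python
def effective_method(method: str, headers: dict, query: dict) -> str:
    """Return the method the client really meant, honoring a POST override."""
    method = method.upper()
    if method != "POST":
        return method  # only POST requests are allowed to carry an override
    # Header names are case-insensitive, so normalize before looking one up.
    lowered = {name.lower(): value for name, value in headers.items()}
    override = lowered.get("x-http-method-override") or query.get("_method")
    return override.upper() if override else method
```

Restricting the override to POST keeps a harmless-looking GET from being turned into something destructive.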

Protocol Version

The request line ends with the protocol version the client wishes to speak. HTTP, like all standards, is an evolving one, and there have been a few versions of HTTP defined over the years. The example above uses HTTP/1.1, which is widely supported, but HTTP/2, introduced in 2015, is now also widely supported.

By allowing clients to request a particular protocol version, servers and clients can start supporting the newer version while still being able to fall back to the older version if the other side doesn’t yet support the new version. For example, a client can request HTTP/2.0 but the server can reply saying it only supports HTTP/1.1. The client can then gracefully downgrade and use the 1.1 version for the rest of the conversation.

Headers

The next lines in the request specify one or more headers. A header is a name/value pair, like a parameter, that provides some additional meta-data about the request. The Host header is required on all requests and must be set to the host name the client thinks it is talking to. This allows a single server to host several different web sites at the same time: it can use the Host header to determine which site the client is requesting.

The HTTP specification defines several standard headers. The ones you will most commonly use when making requests are as follows:

| header | meaning |
|---|---|
| Authorization | Some sort of token that identifies an authenticated session or a user account. The server defines what it accepts in this header. |
| Content-Length | If you are sending content to the server, this specifies how many bytes you are sending. This is typically set for you automatically by the library you use to make the request. This tells the server how much data to expect so it knows when it’s done reading the request off the network. |
| Content-Type | If you are sending content, this specifies the MIME type you are using for that data (e.g., JSON, XML, HTML, or some sort of media type). |
| Cookie | A value the server provided in the Set-Cookie response header during a previous request. Cookies are handled automatically within the browser and by most HTTP client libraries that offer a “cookie jar” implementation. |
| If-Modified-Since | If set to a date/time, the server will respond with the resource’s state only if that state has been modified since the specified date/time. Useful when requesting large resources that don’t change very often (video, large images, etc). |
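To illustrate how a server might act on If-Modified-Since, here is a small sketch. The respond function is a hypothetical helper that only picks the status code: 304 (Not Modified) tells the client its cached copy is still good, so no body is sent. It assumes last_modified is a timezone-aware datetime.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def respond(last_modified: datetime, request_headers: dict) -> int:
    """Return 304 if the client's copy is still current, else 200."""
    since = request_headers.get("If-Modified-Since")
    if since:
        try:
            # HTTP dates have one-second resolution, so drop microseconds.
            if last_modified.replace(microsecond=0) <= parsedate_to_datetime(since):
                return 304  # Not Modified: no body needs to be sent
        except (TypeError, ValueError):
            pass  # unparseable date: ignore the header and send the body
    return 200
```

A malformed header is simply ignored here, which errs on the side of sending the full resource.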

Let’s Try It!

As noted earlier, HTTP/1.1 is just plain text so you can manually type HTTP requests at the command line. So let’s do it! We will use the nc (netcat) command to manually send an HTTP request to Google’s web server and view the responses.

In your terminal, use the nc command to connect to port 80 on Google’s web server:

nc www.google.com 80

Now type the following two lines of text exactly as you see them below, and then hit Enter twice to send a blank line, which signals the end of your request.

GET / HTTP/1.1

Host: www.google.com

After you send the blank line it should respond with a standard HTTP response message followed by a bunch of HTML. That’s Google’s home page! You requested the resource path /, which is the home page for the entire web site.

Hit Ctrl+c to exit out of netcat and return to your own command prompt.

What you just did is what your web browser does when you enter http://www.google.com/ into the address bar. The browser parses the URL and determines that the protocol is http, the host is www.google.com and the resource path is /. It then opens a network connection to port 80 (default for HTTP) on www.google.com and sends at least those two lines (most browsers include a bunch more headers that provide the server with extra meta-data).
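If you would rather see those same steps in code, here is a rough Python sketch that does what you just did with netcat. The build_request and fetch names are my own, and I added a Connection: close header (not part of what you typed above) so the server closes the connection when it is done and the read loop knows when to stop.

```python
import socket


def build_request(method: str, path: str, host: str) -> bytes:
    """Assemble the request line, headers, and the blank line that ends them."""
    lines = [
        f"{method} {path} HTTP/1.1",
        f"Host: {host}",
        "Connection: close",  # ask the server to close the connection after replying
        "",                   # blank line signals the end of the request
        "",
    ]
    # Lines in an HTTP/1.1 message end with a carriage return and line feed.
    return "\r\n".join(lines).encode("ascii")


def fetch(host: str, path: str = "/", port: int = 80) -> bytes:
    """Send one GET request and return the raw response bytes."""
    with socket.create_connection((host, port), timeout=10) as conn:
        conn.sendall(build_request("GET", path, host))
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # the server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)
```

Calling fetch("www.google.com") should return the same status line, headers, and HTML you saw in your terminal.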

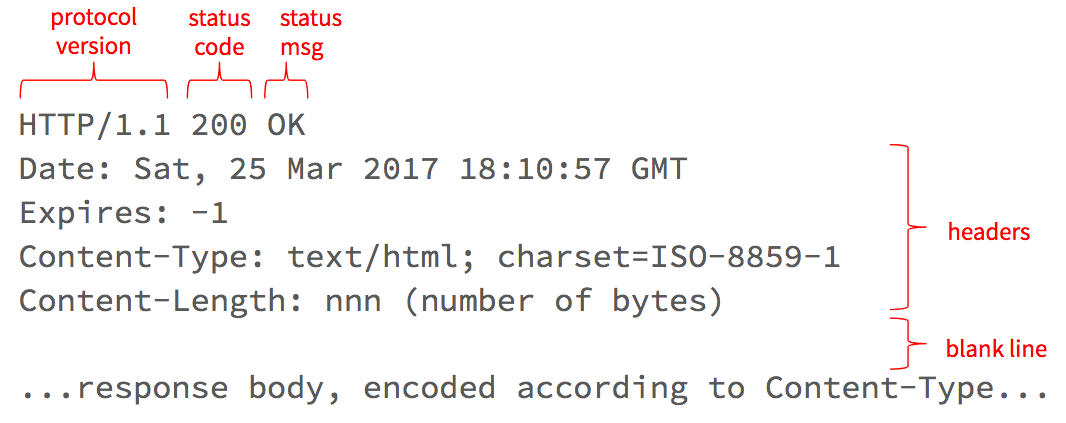

HTTP Responses

After making your request, the server will respond with a message that looks something like this:

The first line tells the client what version of the protocol the server is using, as well as the response status code and message.
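Because the response is also plain text in HTTP/1.1, pulling it apart takes only a few lines. The sketch below parses the first line and the headers that follow it; the parse_response_head name and the sample values in the usage note are invented for illustration.

```python
def parse_response_head(head: str) -> tuple[str, int, str, dict]:
    """Split a response head into (version, status code, message, headers)."""
    status_line, *header_lines = head.split("\r\n")
    # The message may contain spaces ("Not Found"), so split at most twice.
    version, code, *message = status_line.split(" ", 2)
    headers = {}
    for line in header_lines:
        if not line:  # a blank line ends the headers
            break
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return version, int(code), (message[0] if message else ""), headers
```

Given a head such as "HTTP/1.1 404 Not Found" followed by a Content-Length header, this returns the version HTTP/1.1, the code 404, the message Not Found, and a dictionary with a lower-cased content-length key.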

Status Codes

The status code tells the client whether the request was successful or not. There are several status codes defined in the HTTP standard, but they bucket into the following ranges:

| range | meaning |
|---|---|
| 100-199 | still working on it, more to come |
| 200-299 | successful |
| 300-399 | request the URL in the Location response header instead |
| 400-499 | client made a bad request |
| 500-599 | something went wrong on the server-side |

Most HTTP client libraries will handle 100 and 300-range status codes automatically, so your client-side code only has to deal with 200, 400, and 500-range codes. In general, you should treat any code >= 400 as an error, but note that these error-range codes are distinct from a network error: if the host is unreachable you will get a network error and no response at all.
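In client code, that bucketing often boils down to something like the following sketch. The category labels are my own shorthand for the ranges in the table above.

```python
def classify(status: int) -> str:
    """Map a status code to the range it falls in."""
    if 100 <= status <= 199:
        return "informational"
    if 200 <= status <= 299:
        return "success"
    if 300 <= status <= 399:
        return "redirect"
    if 400 <= status <= 499:
        return "client error"
    if 500 <= status <= 599:
        return "server error"
    raise ValueError(f"not an HTTP status code: {status}")


def is_error(status: int) -> bool:
    """Any code of 400 or above means the request did not succeed."""
    return status >= 400
```

Remember that neither function ever runs for a network error, because in that case there is no response and therefore no status code to inspect.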

The message that follows the status code is mostly redundant, but it’s helpful in cases where a service defines new non-standard status codes, such as those defined in the now famous HyperText Coffee Pot Control Protocol (check out the meaning of status code 418).

Response Headers

Similar to requests, HTTP responses also contain one or more headers. These headers can provide additional meta-data about the response. The most commonly-used ones are as follows:

| header | meaning |
|---|---|
| Content-Type | The MIME type used for the data in the response body. For example application/json; charset=utf-8 means that the response body contains data encoded into JSON using the UTF-8 character set. |
| Content-Length | The number of bytes the server is sending in the response body. |
| Expires and Cache-Control | Specifies if and for how long the client may cache the response body. |
| Last-Modified | The date/time the resource was last modified (can be used in the If-Modified-Since header during subsequent requests for this resource if the server says it’s OK to cache the response). |
| Location | For 300-range (Redirect) responses, a URL that the client should ask for instead; for 201 (Created) responses, the URL for the newly-created resource. |
| Retry-After | The number of seconds, or a specific date/time, after which the client may ask for the resource. This is commonly returned when the server is throttling requests and the client makes too many within a given time period. The client should wait until the Retry-After time before making another request. |
| Set-Cookie | A cookie value that should be sent back in the Cookie header with all subsequent requests to this same origin. Cookies are handled automatically in the browser and by most HTTP client libraries that support a “cookie jar” implementation. |
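Since Retry-After can hold either a number of seconds or a date/time, a client has to handle both forms. Here is a minimal sketch of how that might look; retry_delay is a hypothetical helper, and it assumes the now argument is a timezone-aware datetime.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_delay(header_value: str, now: datetime) -> float:
    """Seconds to wait before retrying, from a Retry-After header value."""
    value = header_value.strip()
    if value.isdigit():
        return float(value)  # first form: a delay in seconds
    retry_at = parsedate_to_datetime(value)  # second form: a specific date/time
    # A date in the past means the client may retry right away.
    return max(0.0, (retry_at - now).total_seconds())
```

A client would typically sleep for the returned number of seconds before sending the request again.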

Response Body

Following the response headers is the response body. For a GET request, this will be the current state of the requested resource, encoded in the MIME type specified in the Content-Type response header. The number of bytes in the response body is indicated by the Content-Length header. If the server doesn’t know the final total size, but still wants to start writing the data it has, the server can use chunked transfer encoding, which involves writing chunks of data one at a time, each preceded by its length.
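To make chunked transfer encoding less abstract, here is a small decoder sketch. In HTTP/1.1 each chunk starts with its length written in hexadecimal on its own line, and a zero-length chunk marks the end. This version ignores optional chunk extensions and trailing headers, and most HTTP client libraries do all of this for you.

```python
def decode_chunked(body: bytes) -> bytes:
    """Reassemble a chunked body into the original bytes."""
    decoded = bytearray()
    pos = 0
    while True:
        line_end = body.index(b"\r\n", pos)
        # The size line may carry ";extensions", which this sketch ignores.
        size = int(body[pos:line_end].split(b";")[0], 16)
        if size == 0:  # a zero-length chunk marks the end of the body
            break
        start = line_end + 2
        decoded += body[start:start + size]
        pos = start + size + 2  # skip the line ending that follows the chunk data
    return bytes(decoded)
```

For example, a body made of a 5-byte chunk containing Hello and a 7-byte chunk containing ", World" decodes to Hello, World.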

HTTP/2

The examples so far have been in version 1.1 of HTTP, which uses simple plain-text, human-readable messages. Although these plain text messages make the protocol easy to see and understand, they are not as efficient as a more-compact, binary protocol would be. These inefficiencies led many browser and server vendors to experiment with binary protocols (e.g., SPDY), which eventually became the basis for a new version 2.0 of HTTP, known as HTTP/2. This version is now supported by all the major browser vendors, as well as more recent web server frameworks.

Although HTTP/2 is no longer human-readable, it still retains all the same concepts outlined in this tutorial. The developer tools within the browsers will still show you all the request and response headers, bodies, and status codes, but on the wire they are encoded into a very compact binary format. This should increase the overall speed of requests/responses, while also reducing the number of bytes that have to be transmitted across our networks.

Stateless Protocol

HTTP differs from older internet protocols in an important way: it is stateless. You might find that odd given that GET requests return the “current state of the resource,” but the state we are talking about here is not that of the resources passed back and forth, but that of the network connection itself.

In older protocols like FTP, clients connect to servers and then execute commands similar to those you execute at the command line on your local computer. For example, you can cd to a different directory and then all subsequent commands executed on that same FTP connection will be interpreted by the server relative to that new directory. But that also implies that all subsequent requests must be sent to the same server, as only that server knows what the current directory is.

With HTTP, there is no state maintained about the connection in-between requests. Each request is processed independently from all other requests sent on the same connection. This is what we mean by “stateless.”

This keeps HTTP very simple, but it also allows it to scale. If requests sent by the same client are independent of each other, they can be routed to different servers on the backend by load balancers. As we get more and more concurrent requests, we can simply increase the number of those downstream servers, and maybe add another load balancer. This is known as horizontal scaling because we are increasing the number of servers, not the size of the existing servers. Horizontal scaling is possible precisely because HTTP is a stateless protocol.

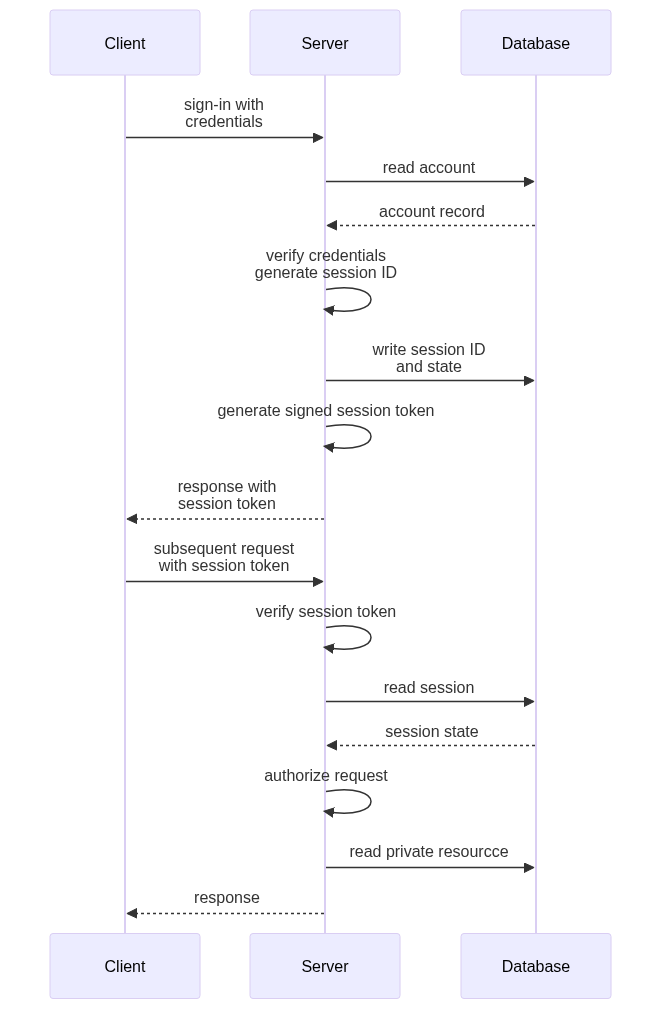

But like all things in software, this stateless quality is a tradeoff: it enables horizontal scaling, but it makes other things much more difficult. For example, supporting authenticated sessions (where a user signs in during one request, and then accesses private resources in subsequent requests) is tricky when requests are independent and could be routed to different servers on the backend. We will return to this when we discuss Session and Authorization Tokens.

Environment Variables

The bash shell is really a programming language interpreter in disguise. Whenever you execute something at the command line, you are really running a small program. These programs typically launch other programs, but there’s quite a bit of interpretation that can occur before those programs are executed.

Among the things the bash shell interprets are environment variables. Environment variables are just like variables in other languages:

- they have a name

- they have a scope that determines where they are visible and where they are not

- they can be assigned a value and that value can change over time

- they can be used in expressions where you want the current value of the variable to be used

Declaring and Using Environment Variables

Open a new command-line (terminal) window, so you can follow along as I explain the various commands. To declare a new environment variable that is visible to your current command-line shell and any programs launched from it, use this simple syntax:

export MY_VARIABLE="some value"

This creates a new environment variable named MY_VARIABLE set to the string "some value". The double-quotes around "some value" allow us to embed a space in the string while still treating it as a singular value.

To see the value of an environment variable, use the echo command and refer to the variable with a preceding $ like so:

echo $MY_VARIABLE

This will print the current value of the variable named MY_VARIABLE, which at this point is some value. Note that you use a $ on the front when you refer to the variable in a command; that way the shell knows you are referring to an environment variable, as opposed to a file or some other program.

When the shell sees the $ prefix, it “expands” the variable into its current value, and then continues processing the overall command. In this case, the shell expands this into echo "some value" and then runs the echo command passing that string as the first and only parameter. The echo command simply prints whatever you pass to it, so it prints some value and exits.

You can name your variables whatever you want, and you can actually use any casing you want, but we traditionally use all caps for environment variables, and an underscore to separate words. This keeps them visibly separate from the myriad of commands, programs, and files you can refer to at the command line, which are typically in lower-case.

Predefined Environment Variables

You can use environment variables in any command, and there are several that are already defined for you on most systems. For example, the environment variable $USER is typically set to the name of the currently signed-in user. You can check the value of this using that same echo command, but this time we can also add a little greeting:

echo "Hello, $USER"

Notice the use of double quotation marks. As noted above, these are used to wrap a string that might contain spaces, but note that you can also use environment variables within these strings. The variables will be expanded into their current value before the string is passed to the echo command. This expansion happens only when you use double-quotes; if you use single quotes, the $USER will be treated as literal text and not a variable to be expanded.
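Here is a small sketch of that difference between double and single quotes. GREETING_NAME is a made-up variable used only for this example, so the result doesn’t depend on who is signed in.

```shell
# GREETING_NAME is a made-up variable for this example.
GREETING_NAME="Ada"

double="Hello, $GREETING_NAME"   # double quotes: the variable is expanded
single='Hello, $GREETING_NAME'   # single quotes: kept as literal text

echo "$double"   # prints: Hello, Ada
echo "$single"   # prints: Hello, $GREETING_NAME
```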

Another one that is typically set for you is $HOME, which is the file path to your home directory. You can use this with the ls command, just like you’d use any other file path:

ls $HOME

That will list all the files in your home directory. If you want to change to your home directory, use that same variable with the cd command:

cd $HOME

Since this is such a common operation, most shells provide the shorter ~ symbol as a synonym for the $HOME variable:

# this changes to your home directory

cd ~

# and this lists the files in the Documents folder in your home directory

ls ~/Documents

# and this changes back to whatever directory you were in last

cd -

The lines above that are prefixed with # are comments, just like comments you add to your Java or JavaScript source files. Anything following a # character on the same line will be ignored by the command-line shell.

The PATH Variable

The other critical environment variable that is already set for you is the $PATH variable, which determines which directories the shell looks in for programs that you try to execute at the command-line. You can see your current path using that same echo command:

echo $PATH

Your output will likely contain several directories, separated by :. The shell will look in each of those directories, in the order specified, to find the bare commands you execute. For example, the ls command you ran above is actually a small executable that lives in a directory included in your $PATH. To find out which one, use this command:

which ls
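If the single long line printed by echo $PATH is hard to read, one option is to split it so each directory appears on its own line, in the order the shell searches them. This is just a convenience sketch using the standard tr command; it also shows command -v, which is a portable alternative to which.

```shell
# Print each directory in PATH on its own line, in search order.
echo "$PATH" | tr ':' '\n'

# command -v is a portable (POSIX) way to ask where a command lives.
command -v ls
```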

You can adjust the PATH variable by resetting it, but it’s more common to add to it by referring to its existing value. For example, to add a new directory to the end of your path, you can use a command like this:

export PATH=$PATH:$HOME/bin

The shell will first expand $PATH to be the current value of the PATH environment variable, and then expand $HOME to be the current value of the HOME environment variable (your home directory). So in total, this command will add the bin directory within your home directory to the path.
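One thing to watch for: if you run that export command several times, the same directory gets appended several times. The sketch below shows one possible way to avoid that. The function name add_to_path is made up for this example and is not a built-in shell command.

```shell
# Hypothetical helper: append a directory to PATH only if it is not already there.
add_to_path() {
  case ":$PATH:" in
    *":$1:"*) ;;                 # already present, so do nothing
    *) PATH="$PATH:$1" ;;        # otherwise add it to the end
  esac
}

add_to_path "$HOME/bin"
add_to_path "$HOME/bin"   # second call has no effect
export PATH
```

Wrapping $PATH in extra colons before matching means the check works the same way whether the directory is first, last, or somewhere in the middle of the list.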

But that change will only affect the current command-line shell window. To make that change persistent across all shell windows you might open, we first need to understand the scoping rules for environment variables.

Scoping Rules for Environment Variables

When you declare a variable inside a function in languages like Java or JavaScript, that variable is visible only inside that function. We call that the variable’s “scope.” Environment variables have a scope as well, and understanding their scoping rules will help you realize why some environment variables are visible in every command line shell, while others are not.

When we declared a variable above, we used the keyword export in front of the variable name. This sets the variable’s scope so that it is visible in the current command-line shell, and any other program launched from that shell. If you omit the export keyword, the shell will create the variable, but it will be visible only in the current shell, and not in any other program launched from that shell. Unexported private variables can be useful at times, but we typically create environment variables so that other programs can read them, so you will most often use export when declaring a new environment variable.
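You can see the effect of export for yourself with a short experiment. The variable names LOCAL_ONLY and SHARED_VAR are made up for this sketch, and sh -c is used simply as a convenient way to launch another program from the current shell.

```shell
LOCAL_ONLY="shell only"                  # not exported
export SHARED_VAR="shell and children"   # exported

# sh -c starts a child process; the single quotes delay expansion
# until the child runs, so we see what the child can actually read.
child_output="$(sh -c 'echo "LOCAL_ONLY=[$LOCAL_ONLY] SHARED_VAR=[$SHARED_VAR]"')"

echo "$child_output"
# prints: LOCAL_ONLY=[] SHARED_VAR=[shell and children]
```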

But even if you use the export keyword, the variable you declare won’t be visible to another command-line shell that you start from your desktop. To see this in action, start another command-line (terminal) window and type that echo command again:

echo $MY_VARIABLE

Since this is a new and different shell from the one in which you declared the variable, you won’t be able to see this MY_VARIABLE variable, so the echo command should return only a blank line. Unlike other programming languages, the shell won’t generate an error when you refer to an unset environment variable. Instead, it just expands that variable to an empty string, so the echo command has nothing to print except the newline.
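Because an unset variable silently expands to nothing, it can be handy to supply a fallback value. The sketch below uses the standard ${NAME:-default} form of expansion, which substitutes the default when the variable is unset or empty; the square brackets are only there to make the empty value visible.

```shell
unset MY_VARIABLE

plain="[$MY_VARIABLE]"                     # unset, so it expands to nothing
with_default="[${MY_VARIABLE:-not set}]"   # falls back to a default value

echo "$plain"          # prints: []
echo "$with_default"   # prints: [not set]
```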

To declare a variable that is visible in every command-line shell you open, we need to declare it at a higher scope. How you do this depends on which operating system you are using.

Declaring Persistent User-Global Variables

Now that we understand the scoping rules for environment variables, I can explain how to set persistent variables that are global for the current operating system user. Follow the instructions below for your particular operating system.

MacOS and Linux

Both MacOS (formerly OS X) and Linux are based on Unix, and both use a derivative of the original Bourne shell. MacOS now uses zsh and Linux distros tend to use bash.

Shells derived from Bourne have a handy feature where they will execute a script each time you open a new Terminal window, or whenever you sign in to an account via the command-line (e.g., ssh or su). These scripts are simply text files containing commands you would have normally typed manually at the command-line. The shell will execute them silently before you see a prompt.

On MacOS, zsh will run the script stored in ~/.zshenv whenever you open a new Terminal window, or whenever you sign in to an account via the command line. On Linux, bash will run the script in ~/.bashrc when you open a new Terminal window, but will run the script in ~/.bash_profile when you sign in to an account at the command line.

Since these scripts are run every time you open a new Terminal window, we can use them to declare persistent environment variables that will be available in all new Terminal windows we open.

If you’re on a Mac, open ~/.zshenv in your favorite text editor. On Linux, open ~/.bashrc. If you installed Visual Studio Code and enabled the code shell command, you can open the file in VS Code using this command:

# on Mac

code ~/.zshenv

# on Linux

code ~/.bashrc

These scripts are just simple text files containing commands you would have normally typed manually at the command-line. These commands are run sequentially when you start a new Terminal, as if you typed them yourself. So to create an environment variable that gets declared every time you open a new Terminal window, just add the variable declaration to the file using the same syntax you used at the command-line:

# new line inside ~/.zshenv (Mac) or ~/.bashrc (Linux)

export MY_VARIABLE="some value"

Save the file, open a new Terminal window, and then echo $MY_VARIABLE. You should now see the value in the new Terminal window, and any other Terminal window you start from now on.

To undo this, just re-edit ~/.zshenv (Mac) or ~/.bashrc (Linux) and remove that variable declaration. After you save, all new Terminal windows will no longer have that variable set.

Editing these files has no effect on existing Terminal windows because that script is run just once when you first open the Terminal, but you can re-run the script at any time using the source command:

# re-run the start-up script in the current shell

source ~/.zshenv

This is handy whenever you add or change an environment variable, and want that value available in your current Terminal window. It’s such a common operation that the shell also provides the . symbol as a shorter synonym for the source command:

# same as `source ~/.zshenv`

. ~/.zshenv
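The reason you need source (or .) here, rather than just running the script like any other program, follows from the scoping rules above: a script that is run normally executes in a child process, so any variables it exports disappear when it exits. The sketch below illustrates this with a throwaway script; DEMO_VAR and the temporary file are made up for this example.

```shell
# DEMO_VAR and the temporary script are made up for this example.
unset DEMO_VAR
script="$(mktemp)"
echo 'export DEMO_VAR="set by script"' > "$script"

sh "$script"                        # runs in a child process
after_run="${DEMO_VAR:-unset}"

. "$script"                         # runs in the current shell
after_source="${DEMO_VAR:-unset}"

rm -f "$script"
echo "after running: $after_run"       # prints: after running: unset
echo "after sourcing: $after_source"   # prints: after sourcing: set by script
```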

Windows

If you are using Windows and want to work with command-line tools, I highly recommend using the Windows Subsystem for Linux (WSL). This allows you to use bash and all the various commands found in a typical Linux distribution. Once you activate the WSL, you can alter your .bashrc file just like you would on a Linux machine.

Unsetting Environment Variables

If you ever need to unset an environment variable that has been declared in your current shell, use the unset command:

unset MY_VARIABLE

Note that here you don’t use the $ prefix because you don’t want the shell to expand the variable into its current value. Instead, you want to pass the variable name itself to the unset command.

Just as when you declare variables manually in the current shell, this will unset the variable in the current shell only. All other shells remain unaffected.
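A quick way to convince yourself of this without opening a second window is to unset the variable inside a subshell, which is a separate copy of the current shell. This is only a sketch: a subshell is not identical to a new Terminal window, but it shows that unsetting in one shell leaves the other untouched.

```shell
export MY_VARIABLE="some value"

# Command substitution $(...) runs its commands in a subshell,
# so the unset happens there and not in the current shell.
in_subshell="$(unset MY_VARIABLE; echo "${MY_VARIABLE:-unset}")"
in_parent="${MY_VARIABLE:-unset}"

echo "subshell: $in_subshell"   # prints: subshell: unset
echo "parent: $in_parent"       # prints: parent: some value
```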

Intro to Cryptography

If you want to design, build, or manage software services on the Internet, you need to understand at least the basics of cryptography. You don’t need to fully understand the math—few people do! And you should never implement the core algorithms yourself—instead always use the canonical library for your chosen programming language. But you do need to understand what these core algorithms are, what they can and cannot do, and how to combine them to create secure systems.